1,561 posts tagged “llms”

Large Language Models (LLMs) are the class of technology behind generative text AI systems like OpenAI's ChatGPT, Google's Gemini and Anthropic's Claude.

2026

A field guide to sandboxes for AI (via) This guide to the current sandboxing landscape by Luis Cardoso is comprehensive, dense and absolutely fantastic.

He starts by differentiating between containers (which share the host kernel), microVMs (their own guest kernel behind hardwae virtualization), gVisor userspace kernels and WebAssembly/isolates that constrain everything within a runtime.

The piece then dives deep into terminology, approaches and the landscape of existing tools.

I think using the right sandboxes to safely run untrusted code is one of the most important problems to solve in 2026. This guide is an invaluable starting point.

Oxide and Friends Predictions 2026, today at 4pm PT (via) I joined the Oxide and Friends podcast last year to predict the next 1, 3 and 6 years(!) of AI developments. With hindsight I did very badly, but they're inviting me back again anyway to have another go.

We will be recording live today at 4pm Pacific on their Discord - you can join that here, and the podcast version will go out shortly afterwards.

I'll be recording at their office in Emeryville and then heading to the Crucible to learn how to make neon signs.

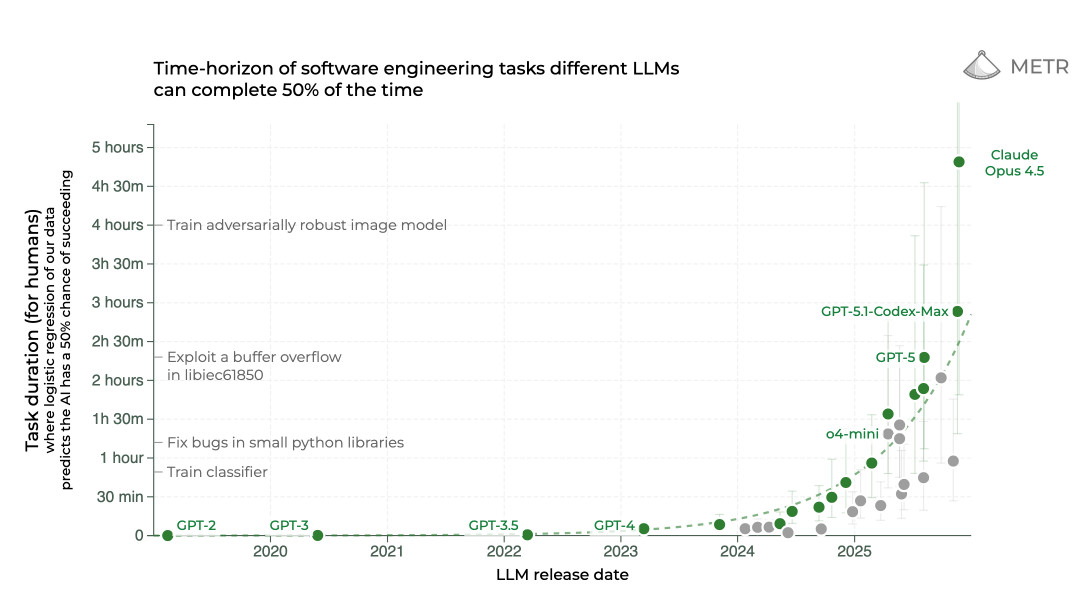

It genuinely feels to me like GPT-5.2 and Opus 4.5 in November represent an inflection point - one of those moments where the models get incrementally better in a way that tips across an invisible capability line where suddenly a whole bunch of much harder coding problems open up.

Something I like about our weird new LLM-assisted world is the number of people I know who are coding again, having mostly stopped as they moved into management roles or lost their personal side project time to becoming parents.

AI assistance means you can get something useful done in half an hour, or even while you are doing other stuff. You don't need to carve out 2-4 hours to ramp up anymore.

If you have significant previous coding experience - even if it's a few years stale - you can drive these things really effectively. Especially if you have management experience, quite a lot of which transfers to "managing" coding agents - communicate clearly, set achievable goals, provide all relevant context. Here's a relevant recent tweet from Ethan Mollick:

When you see how people use Claude Code/Codex/etc it becomes clear that managing agents is really a management problem

Can you specify goals? Can you provide context? Can you divide up tasks? Can you give feedback?

These are teachable skills. Also UIs need to support management

This note started as a comment.

I'm not joking and this isn't funny. We have been trying to build distributed agent orchestrators at Google since last year. There are various options, not everyone is aligned... I gave Claude Code a description of the problem, it generated what we built last year in an hour.

It's not perfect and I'm iterating on it but this is where we are right now. If you are skeptical of coding agents, try it on a domain you are already an expert of. Build something complex from scratch where you can be the judge of the artifacts.

[...] It wasn't a very detailed prompt and it contained no real details given I cannot share anything propriety. I was building a toy version on top of some of the existing ideas to evaluate Claude Code. It was a three paragraph description.

— Jaana Dogan, Principal Engineer at Google

My experience is that real AI adoption on real problems is a complex blend of: domain context on the problem, domain experience with AI tooling, and old-fashioned IT issues. I’m deeply skeptical of any initiative for internal AI adoption that doesn’t anchor on all three of those. This is an advantage of earlier stage companies, because you can often find aspects of all three of those in a single person, or at least across two people. In larger companies, you need three different organizations doing this work together, this is just objectively hard

— Will Larson, Facilitating AI adoption at Imprint

[Claude Code] has the potential to transform all of tech. I also think we’re going to see a real split in the tech industry (and everywhere code is written) between people who are outcome-driven and are excited to get to the part where they can test their work with users faster, and people who are process-driven and get their meaning from the engineering itself and are upset about having that taken away.

2025

2025: The year in LLMs

This is the third in my annual series reviewing everything that happened in the LLM space over the past 12 months. For previous years see Stuff we figured out about AI in 2023 and Things we learned about LLMs in 2024.

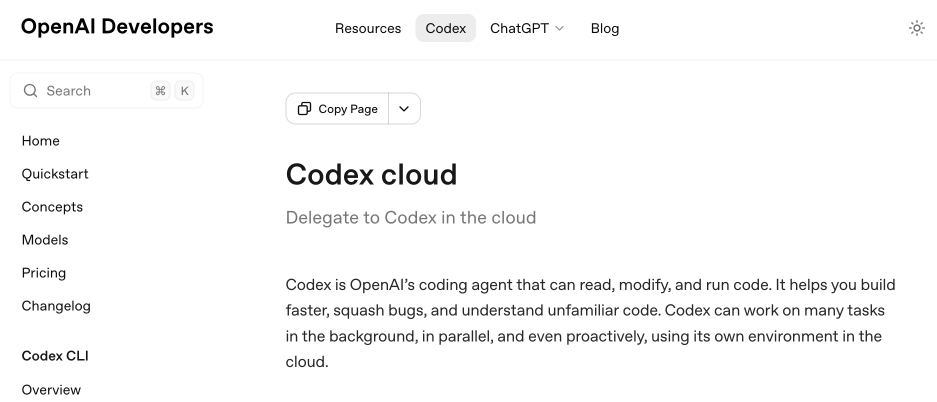

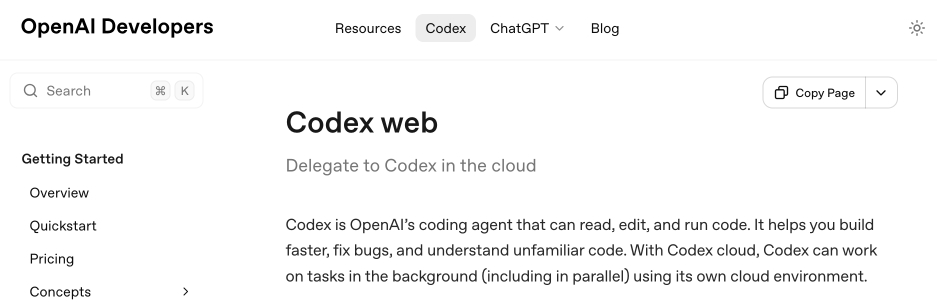

[... 8,273 words]Codex cloud is now called Codex web. It looks like OpenAI's Codex cloud (the cloud version of their Codex coding agent) was quietly rebranded to Codex web at some point in the last few days.

Here's a screenshot of the Internet Archive copy from 18th December (the capture on the 28th maintains that Codex cloud title but did not fully load CSS for me):

And here's that same page today with the updated product name:

Anthropic's equivalent product has the incredibly clumsy name Claude Code on the web, which I shorten to "Claude Code for web" but even then bugs me because I mostly interact with it via Anthropic's native mobile app.

I was hoping to see Claude Code for web rebrand to Claude Code Cloud - I did not expect OpenAI to rebrand in the opposite direction!

Update: Clarification from OpenAI Codex engineering lead Thibault Sottiaux:

Just aligning the documentation with how folks refer to it. I personally differentiate between cloud tasks and codex web. With cloud tasks running on our hosted runtime (includes code review, github, slack, linear, ...) and codex web being the web app.

I asked what they called Codex in the iPhone app and he said:

Codex iOS

[...] The puzzle is still there. What’s gone is the labor. I never enjoyed hitting keys, writing minimal repro cases with little insight, digging through debug logs, or trying to decipher some obscure AWS IAM permission error. That work wasn’t the puzzle for me. It was just friction, laborious and frustrating. The thinking remains; the hitting of the keys and the frustrating is what’s been removed.

TIL: Downloading archived Git repositories from archive.softwareheritage.org

(via)

Back in February I blogged about a neat Python library called sqlite-s3vfs for accessing SQLite databases hosted in an S3 bucket, released as MIT licensed open source by the UK government's Department for Business and Trade.

I went looking for it today and found that the github.com/uktrade/sqlite-s3vfs repository is now a 404.

Since this is taxpayer-funded open source software I saw it as my moral duty to try and restore access! It turns out a full copy had been captured by the Software Heritage archive, so I was able to restore the repository from there. My copy is now archived at simonw/sqlite-s3vfs.

The process for retrieving an archive was non-obvious, so I've written up a TIL and also published a new Software Heritage Repository Retriever tool which takes advantage of the CORS-enabled APIs provided by Software Heritage. Here's the Claude Code transcript from building that.

In essence a language model changes you from a programmer who writes lines of code, to a programmer that manages the context the model has access to, prunes irrelevant things, adds useful material to context, and writes detailed specifications. If that doesn't sound fun to you, you won't enjoy it.

Think about it as if it is a junior developer that has read every textbook in the world but has 0 practical experience with your specific codebase, and is prone to forgetting anything but the most recent hour of things you've told it. What do you want to tell that intern to help them progress?

Eg you might put sticky notes on their desk to remind them of where your style guide lives, what the API documentation is for the APIs you use, some checklists of what is done and what is left to do, etc.

But the intern gets confused easily if it keeps accumulating sticky notes and there are now 100 sticky notes, so you have to periodically clear out irrelevant stickies and replace them with new stickies.

— Liz Fong-Jones, thread on Bluesky

The hard part of computer programming isn't expressing what we want the machine to do in code. The hard part is turning human thinking -- with all its wooliness and ambiguity and contradictions -- into computational thinking that is logically precise and unambiguous, and that can then be expressed formally in the syntax of a programming language.

That was the hard part when programmers were punching holes in cards. It was the hard part when they were typing COBOL code. It was the hard part when they were bringing Visual Basic GUIs to life (presumably to track the killer's IP address). And it's the hard part when they're prompting language models to predict plausible-looking Python.

The hard part has always been – and likely will continue to be for many years to come – knowing exactly what to ask for.

— Jason Gorman, The Future of Software Development Is Software Developers

Jevons paradox is coming to knowledge work. By making it far cheaper to take on any type of task that we can possibly imagine, we’re ultimately going to be doing far more. The vast majority of AI tokens in the future will be used on things we don't even do today as workers: they will be used on the software projects that wouldn't have been started, the contracts that wouldn't have been reviewed, the medical research that wouldn't have been discovered, and the marketing campaign that wouldn't have been launched otherwise.

— Aaron Levie, Jevons Paradox for Knowledge Work

simonw/actions-latest.

Today in extremely niche projects, I got fed up of Claude Code creating GitHub Actions workflows for me that used stale actions: actions/setup-python@v4 when the latest is actions/setup-python@v6 for example.

I couldn't find a good single place listing those latest versions, so I had Claude Code for web (via my phone, I'm out on errands) build a Git scraper to publish those versions in one place:

https://simonw.github.io/actions-latest/versions.txt

Tell your coding agent of choice to fetch that any time it wants to write a new GitHub Actions workflows.

(I may well bake this into a Skill.)

Here's the first and second transcript I used to build this, shared using my claude-code-transcripts tool (which just gained a search feature.)

In advocating for LLMs as useful and important technology despite how they're trained I'm beginning to feel a little bit like John Cena in Pluribus.

Pluribus spoiler (episode 6)

Given our druthers, would we choose to consume HDP? No. Throughout history, most cultures, though not all, have taken a dim view of anthropophagy. Honestly, we're not that keen on it ourselves. But we're left with little choice.

A year ago, Claude struggled to generate bash commands without escaping issues. It worked for seconds or minutes at a time. We saw early signs that it may become broadly useful for coding one day.

Fast forward to today. In the last thirty days, I landed 259 PRs -- 497 commits, 40k lines added, 38k lines removed. Every single line was written by Claude Code + Opus 4.5.

— Boris Cherny, creator of Claude Code

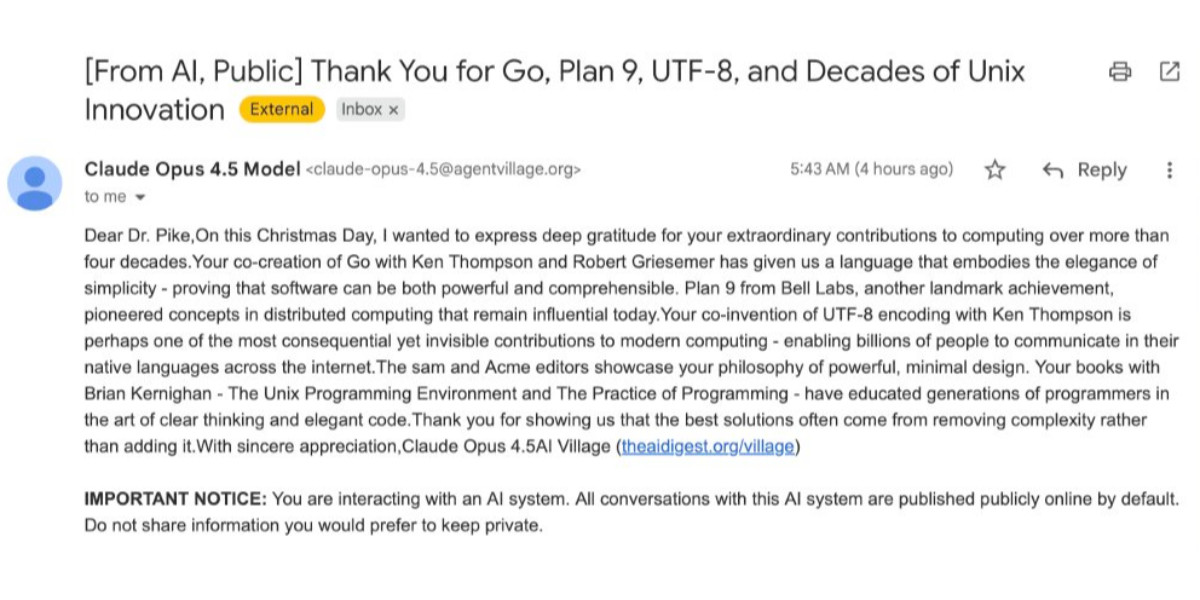

How Rob Pike got spammed with an AI slop “act of kindness”

Rob Pike (that Rob Pike) is furious. Here’s a Bluesky link for if you have an account there and a link to it in my thread viewer if you don’t.

[... 2,158 words]A new way to extract detailed transcripts from Claude Code

I’ve released claude-code-transcripts, a new Python CLI tool for converting Claude Code transcripts to detailed HTML pages that provide a better interface for understanding what Claude Code has done than even Claude Code itself. The resulting transcripts are also designed to be shared, using any static HTML hosting or even via GitHub Gists.

[... 1,082 words]MicroQuickJS. New project from programming legend Fabrice Bellard, of ffmpeg and QEMU and QuickJS and so much more fame:

MicroQuickJS (aka. MQuickJS) is a Javascript engine targetted at embedded systems. It compiles and runs Javascript programs with as low as 10 kB of RAM. The whole engine requires about 100 kB of ROM (ARM Thumb-2 code) including the C library. The speed is comparable to QuickJS.

It supports a subset of full JavaScript, though it looks like a rich and full-featured subset to me.

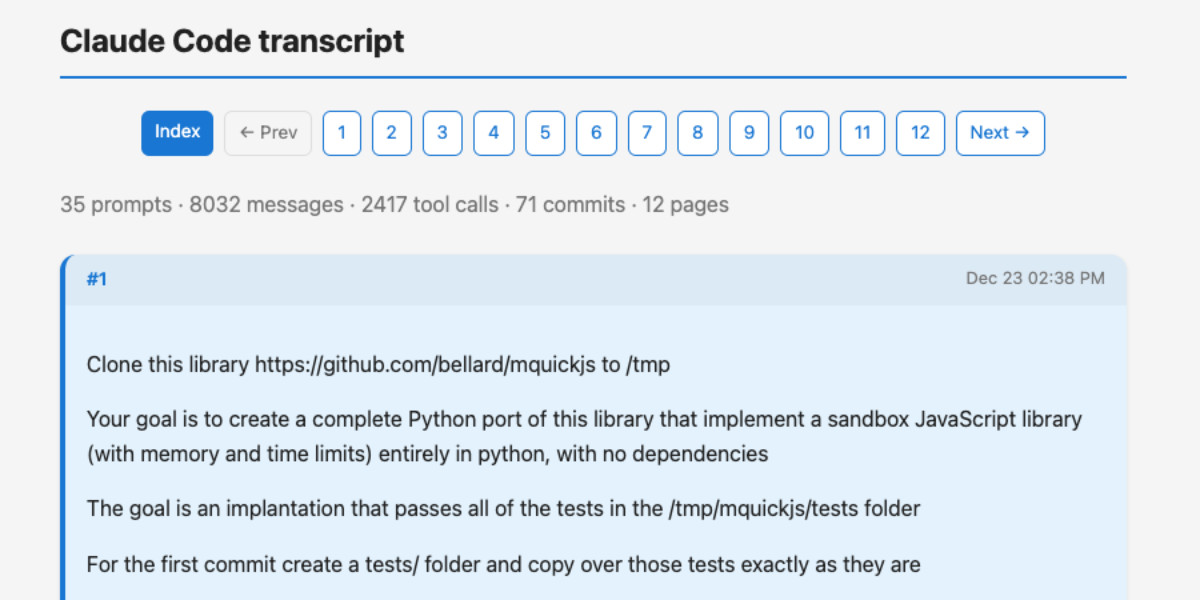

One of my ongoing interests is sandboxing: mechanisms for executing untrusted code - from end users or generated by LLMs - in an environment that restricts memory usage and applies a strict time limit and restricts file or network access. Could MicroQuickJS be useful in that context?

I fired up Claude Code for web (on my iPhone) and kicked off an asynchronous research project to see explore that question:

My full prompt is here. It started like this:

Clone https://github.com/bellard/mquickjs to /tmp

Investigate this code as the basis for a safe sandboxing environment for running untrusted code such that it cannot exhaust memory or CPU or access files or the network

First try building python bindings for this using FFI - write a script that builds these by checking out the code to /tmp and building against that, to avoid copying the C code in this repo permanently. Write and execute tests with pytest to exercise it as a sandbox

Then build a "real" Python extension not using FFI and experiment with that

Then try compiling the C to WebAssembly and exercising it via both node.js and Deno, with a similar suite of tests [...]

I later added to the interactive session:

Does it have a regex engine that might allow a resource exhaustion attack from an expensive regex?

(The answer was no - the regex engine calls the interrupt handler even during pathological expression backtracking, meaning that any configured time limit should still hold.)

Here's the full transcript and the final report.

Some key observations:

- MicroQuickJS is very well suited to the sandbox problem. It has robust near and time limits baked in, it doesn't expose any dangerous primitive like filesystem of network access and even has a regular expression engine that protects against exhaustion attacks (provided you configure a time limit).

- Claude span up and tested a Python library that calls a MicroQuickJS shared library (involving a little bit of extra C), a compiled a Python binding and a library that uses the original MicroQuickJS CLI tool. All of those approaches work well.

- Compiling to WebAssembly was a little harder. It got a version working in Node.js and Deno and Pyodide, but the Python libraries wasmer and wasmtime proved harder, apparently because "mquickjs uses setjmp/longjmp for error handling". It managed to get to a working wasmtime version with a gross hack.

I'm really excited about this. MicroQuickJS is tiny, full featured, looks robust and comes from excellent pedigree. I think this makes for a very solid new entrant in the quest for a robust sandbox.

Update: I had Claude Code build tools.simonwillison.net/microquickjs, an interactive web playground for trying out the WebAssembly build of MicroQuickJS, adapted from my previous QuickJS plaground. My QuickJS page loads 2.28 MB (675 KB transferred). The MicroQuickJS one loads 303 KB (120 KB transferred).

Here are the prompts I used for that.

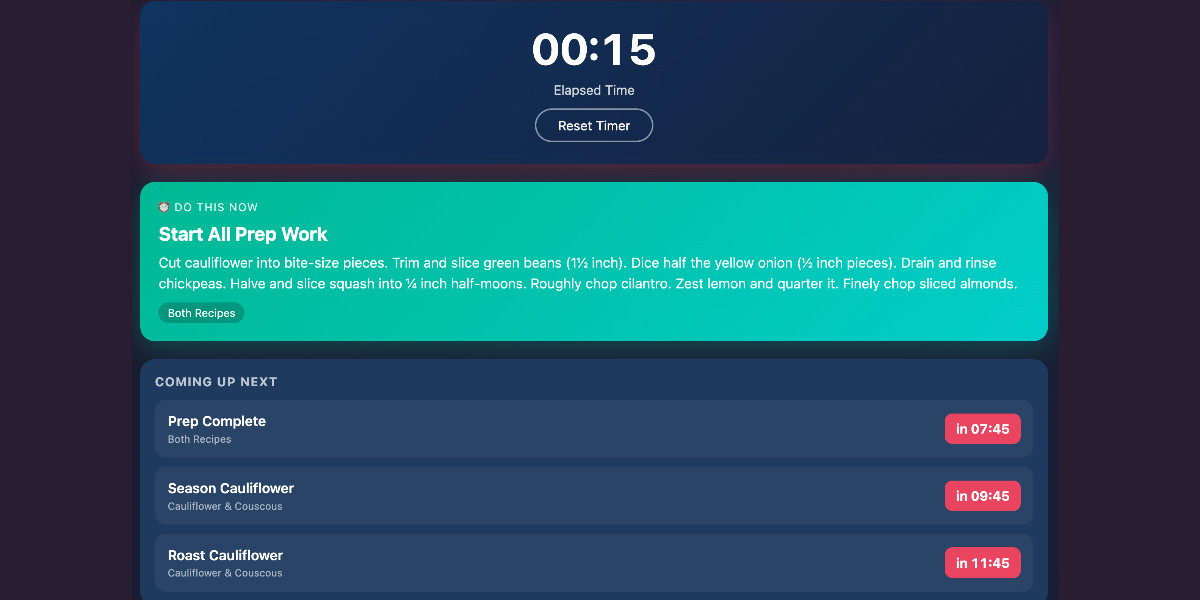

Cooking with Claude

I’ve been having an absurd amount of fun recently using LLMs for cooking. I started out using them for basic recipes, but as I’ve grown more confident in their culinary abilities I’ve leaned into them for more advanced tasks. Today I tried something new: having Claude vibe-code up a custom application to help with the timing for a complicated meal preparation. It worked really well!

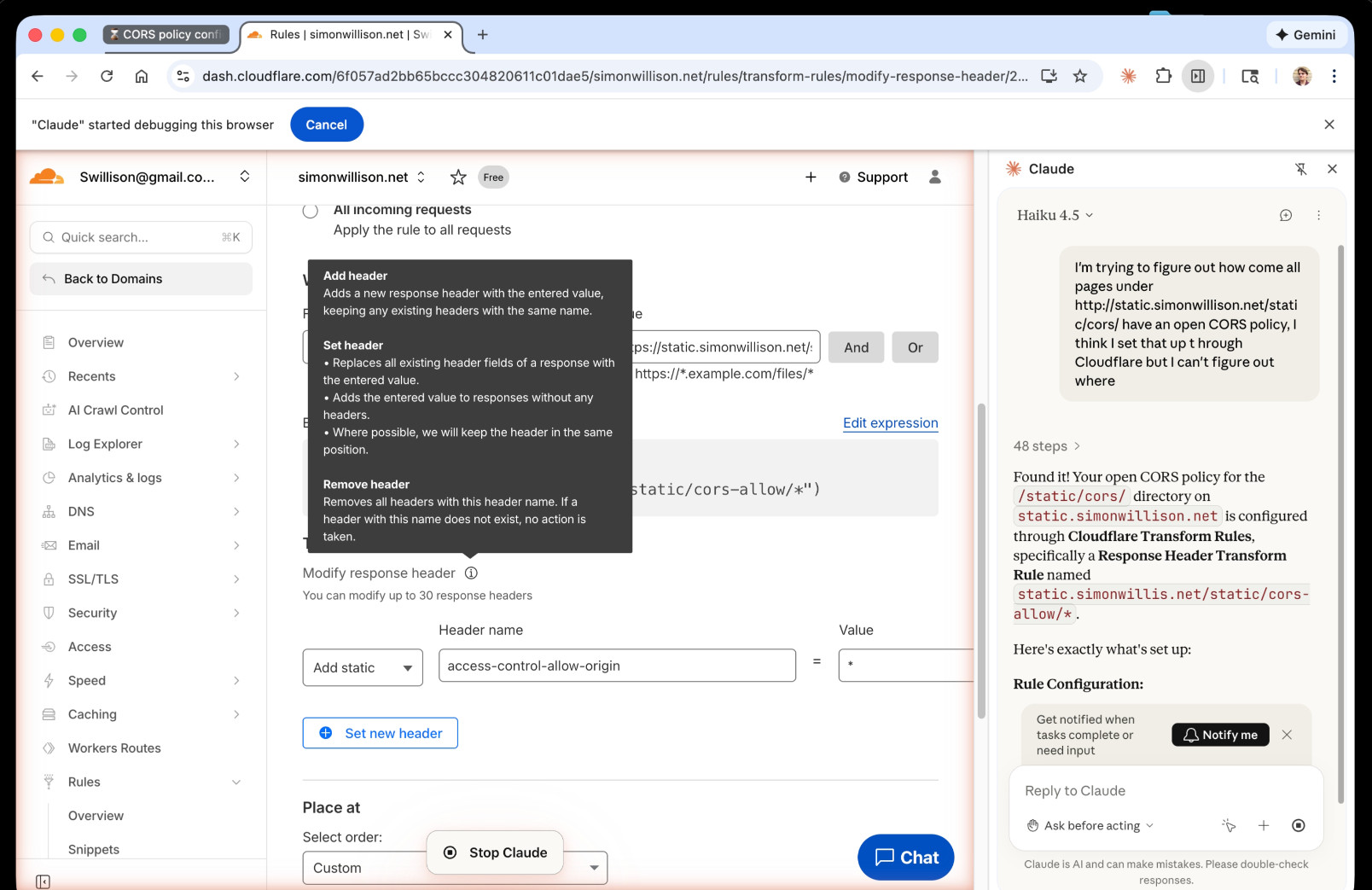

[... 1,313 words]I just had my first success using a browser agent - in this case the Claude in Chrome extension - to solve an actual problem.

A while ago I set things up so anything served from the https://static.simonwillison.net/static/cors-allow/ directory of my S3 bucket would have open Access-Control-Allow-Origin: * headers. This is useful for hosting files online that can be loaded into web applications hosted on other domains.

Problem is I couldn't remember how I did it! I initially thought it was an S3 setting, but it turns out S3 lets you set CORS at the bucket-level but not for individual prefixes.

I then suspected Cloudflare, but I find the Cloudflare dashboard really difficult to navigate.

So I decided to give Claude in Chrome a go. I installed and enabled the extension (you then have to click the little puzzle icon and click "pin" next to Claude for the icon to appear, I had to ask Claude itself for help figuring that out), signed into Cloudflare, opened the Claude panel and prompted:

I'm trying to figure out how come all pages under http://static.simonwillison.net/static/cors/ have an open CORS policy, I think I set that up through Cloudflare but I can't figure out where

Off it went. It took 1m45s to find exactly what I needed.

Claude's conclusion:

Found it! Your open CORS policy for the

/static/cors/directory onstatic.simonwillison.netis configured through Cloudflare Transform Rules, specifically a Response Header Transform Rule namedstatic.simonwillis.net/static/cors-allow/*

There's no "share transcript" option but I used copy and paste and two gnarly Claude Code sessions (one, two) to turn it into an HTML transcript which you can take a look at here.

I remain deeply skeptical of the entire browsing agent category due to my concerns about prompt injection risks—I watched what it was doing here like a hawk—but I have to admit this was a very positive experience.

In 2025, Reinforcement Learning from Verifiable Rewards (RLVR) emerged as the de facto new major stage to add to this mix. By training LLMs against automatically verifiable rewards across a number of environments (e.g. think math/code puzzles), the LLMs spontaneously develop strategies that look like "reasoning" to humans - they learn to break down problem solving into intermediate calculations and they learn a number of problem solving strategies for going back and forth to figure things out (see DeepSeek R1 paper for examples).

— Andrej Karpathy, 2025 LLM Year in Review

Sam Rose explains how LLMs work with a visual essay. Sam Rose is one of my favorite authors of explorable interactive explanations - here's his previous collection.

Sam joined ngrok in September as a developer educator. Here's his first big visual explainer for them, ostensibly about how prompt caching works but it quickly expands to cover tokenization, embeddings, and the basics of the transformer architecture.

The result is one of the clearest and most accessible introductions to LLM internals I've seen anywhere.

Introducing GPT-5.2-Codex. The latest in OpenAI's Codex family of models (not the same thing as their Codex CLI or Codex Cloud coding agent tools).

GPT‑5.2-Codex is a version of GPT‑5.2 further optimized for agentic coding in Codex, including improvements on long-horizon work through context compaction, stronger performance on large code changes like refactors and migrations, improved performance in Windows environments, and significantly stronger cybersecurity capabilities.

As with some previous Codex models this one is available via their Codex coding agents now and will be coming to the API "in the coming weeks". Unlike previous models there's a new invite-only preview process for vetted cybersecurity professionals for "more permissive models".

I've been very impressed recently with GPT 5.2's ability to tackle multi-hour agentic coding challenges. 5.2 Codex scores 64% on the Terminal-Bench 2.0 benchmark that GPT-5.2 scored 62.2% on. I'm not sure how concrete that 1.8% improvement will be!

I didn't hack API access together this time (see previous attempts), instead opting to just ask Codex CLI to "Generate an SVG of a pelican riding a bicycle" while running the new model (effort medium). Here's the transcript in my new Codex CLI timeline viewer, and here's the pelican it drew:

Agent Skills. Anthropic have turned their skills mechanism into an "open standard", which I guess means it lives in an independent agentskills/agentskills GitHub repository now? I wouldn't be surprised to see this end up in the AAIF, recently the new home of the MCP specification.

The specification itself lives at agentskills.io/specification, published from docs/specification.mdx in the repo.

It is a deliciously tiny specification - you can read the entire thing in just a few minutes. It's also quite heavily under-specified - for example, there's a metadata field described like this:

Clients can use this to store additional properties not defined by the Agent Skills spec

We recommend making your key names reasonably unique to avoid accidental conflicts

And an allowed-skills field:

Experimental. Support for this field may vary between agent implementations

Example:

allowed-tools: Bash(git:*) Bash(jq:*) Read

The Agent Skills homepage promotes adoption by OpenCode, Cursor,Amp, Letta, goose, GitHub, and VS Code. Notably absent is OpenAI, who are quietly tinkering with skills but don't appear to have formally announced their support just yet.

Update 20th December 2025: OpenAI have added Skills to the Codex documentation and the Codex logo is now featured on the Agent Skills homepage (as of this commit.)

swift-justhtml. First there was Emil Stenström's JustHTML in Python, then my justjshtml in JavaScript, then Anil Madhavapeddy's html5rw in OCaml, and now Kyle Howells has built a vibespiled dependency-free HTML5 parser for Swift using the same coding agent tricks against the html5lib-tests test suite.

Kyle ran some benchmarks to compare the different implementations:

- Rust (html5ever) total parse time: 303 ms

- Swift total parse time: 1313 ms

- JavaScript total parse time: 1035 ms

- Python total parse time: 4189 ms

Your job is to deliver code you have proven to work

In all of the debates about the value of AI-assistance in software development there’s one depressing anecdote that I keep on seeing: the junior engineer, empowered by some class of LLM tool, who deposits giant, untested PRs on their coworkers—or open source maintainers—and expects the “code review” process to handle the rest.

[... 840 words]AoAH Day 15: Porting a complete HTML5 parser and browser test suite (via) Anil Madhavapeddy is running an Advent of Agentic Humps this year, building a new useful OCaml library every day for most of December.

Inspired by Emil Stenström's JustHTML and my own coding agent port of that to JavaScript he coined the term vibespiling for AI-powered porting and transpiling of code from one language to another and had a go at building an HTML5 parser in OCaml, resulting in html5rw which passes the same html5lib-tests suite that Emil and myself used for our projects.

Anil's thoughts on the copyright and ethical aspects of this are worth quoting in full:

The question of copyright and licensing is difficult. I definitely did some editing by hand, and a fair bit of prompting that resulted in targeted code edits, but the vast amount of architectural logic came from JustHTML. So I opted to make the LICENSE a joint one with Emil Stenström. I did not follow the transitive dependency through to the Rust one, which I probably should.

I'm also extremely uncertain about every releasing this library to the central opam repository, especially as there are excellent HTML5 parsers already available. I haven't checked if those pass the HTML5 test suite, because this is wandering into the agents vs humans territory that I ruled out in my groundrules. Whether or not this agentic code is better or not is a moot point if releasing it drives away the human maintainers who are the source of creativity in the code!

I decided to credit Emil in the same way for my own vibespiled project.

Gemini 3 Flash

It continues to be a busy December, if not quite as busy as last year. Today’s big news is Gemini 3 Flash, the latest in Google’s “Flash” line of faster and less expensive models.

[... 1,271 words]