1,229 posts tagged “python”

The Python programming language.

2025

TIL: Exception.add_note

(via)

Neat tip from Danny Roy Greenfeld: Python 3.11 added a .add_note(message: str) method to the BaseException class, which means you can add one or more extra notes to any Python exception and they'll be displayed in the stacktrace!

Here's PEP 678 – Enriching Exceptions with Notes by Zac Hatfield-Dodds proposing the new feature back in 2021.

Textual v4.0.0: The Streaming Release. Will McGugan may no longer be running a commercial company around Textual, but that hasn't stopped his progress on the open source project.

He recently released v4 of his Python framework for building TUI command-line apps, and the signature feature is streaming Markdown support - super relevant in our current age of LLMs, most of which default to outputting a stream of Markdown via their APIs.

I took an example from one of his tests, spliced in my async LLM Python library and got some help from o3 to turn it into a streaming script for talking to models, which can be run like this:

uv run http://tools.simonwillison.net/python/streaming_textual_markdown.py \
  'Markdown headers and tables comparing pelicans and wolves' \
  -m gpt-4.1-mini

Every day someone becomes a programmer because they figured out how to make ChatGPT build something. Lucky for us: in many of those cases the AI picks Python. We should treat this as an opportunity and anticipate an expansion in the kinds of people who might want to attend a Python conference. Yet many of these new programmers are not even aware that programming communities and conferences exist. It’s in the Python community’s interest to find ways to pull them in.

Reflections on OpenAI (via) Calvin French-Owen spent just over a year working at OpenAI, during which time the organization grew from 1,000 to 3,000 people and Calvin found himself in "the top 30% by tenure".

His reflections on leaving are fascinating - absolutely crammed with detail about OpenAI's internal culture that I haven't seen described anywhere else before.

I think of OpenAI as an organization that started like Los Alamos. It was a group of scientists and tinkerers investigating the cutting edge of science. That group happened to accidentally spawn the most viral consumer app in history. And then grew to have ambitions to sell to governments and enterprises.

There's a lot in here, and it's worth spending time with the whole thing. A few points that stood out to me below.

Firstly, OpenAI are a Python shop who lean a whole lot on Pydantic and FastAPI:

OpenAI uses a giant monorepo which is ~mostly Python (though there is a growing set of Rust services and a handful of Golang services sprinkled in for things like network proxies). This creates a lot of strange-looking code because there are so many ways you can write Python. You will encounter both libraries designed for scale from 10y Google veterans as well as throwaway Jupyter notebooks newly-minted PhDs. Pretty much everything operates around FastAPI to create APIs and Pydantic for validation. But there aren't style guides enforced writ-large.

ChatGPT's success has influenced everything that they build, even at a technical level:

Chat runs really deep. Since ChatGPT took off, a lot of the codebase is structured around the idea of chat messages and conversations. These primitives are so baked at this point, you should probably ignore them at your own peril.

Here's a rare peek at how improvements to large models get discovered and incorporated into training runs:

How large models are trained (at a high-level). There's a spectrum from "experimentation" to "engineering". Most ideas start out as small-scale experiments. If the results look promising, they then get incorporated into a bigger run. Experimentation is as much about tweaking the core algorithms as it is tweaking the data mix and carefully studying the results. On the large end, doing a big run almost looks like giant distributed systems engineering. There will be weird edge cases and things you didn't expect.

Happy 20th birthday Django! Here’s my talk on Django Origins from Django’s 10th

Today is the 20th anniversary of the first commit to the public Django repository!

[... 8,994 words]

If you're running low on disk space and are a uv user, don't forget about uv cache prune:

uv cache prune removes all unused cache entries. For example, the cache directory may contain entries created in previous uv versions that are no longer necessary and can be safely removed. uv cache prune is safe to run periodically, to keep the cache directory clean.

My Mac just ran out of space. I ran OmniDiskSweeper and noticed that the ~/.cache/uv directory was 63.4GB - so I ran this:

uv cache prune

Pruning cache at: /Users/simon/.cache/uv

Removed 1156394 files (37.3GiB)

And now my computer can breathe again!
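If you'd rather check the size of that directory from Python than from a disk usage tool, here's a rough sketch using only the standard library. The helper names are hypothetical, and the cache path is simply the one shown in the output above - uv may keep its cache somewhere else on other systems.

```python
import os


def directory_size_bytes(root: str) -> int:
    """Sum the sizes of all regular files below root (symlinks are skipped)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def format_gib(size_bytes: int) -> str:
    """Format a byte count in GiB (1024**3 bytes), the unit uv reports."""
    return f"{size_bytes / 1024 ** 3:.1f}GiB"
```

Calling format_gib(directory_size_bytes(os.path.expanduser("~/.cache/uv"))) before and after a prune shows how much space came back.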

awwaiid/gremllm (via) Delightfully cursed Python library by Brock Wilcox, built on top of LLM:

from gremllm import Gremllm

counter = Gremllm("counter")
counter.value = 5
counter.increment()
print(counter.value)  # 6?
print(counter.to_roman_numerals())  # VI?

You tell your Gremllm what it should be in the constructor, then it uses an LLM to hallucinate method implementations based on the method name every time you call them!

This utility class can be used for a variety of purposes. Uhm. Also please don't use this and if you do please tell me because WOW. Or maybe don't tell me. Or do.

Here's the system prompt, which starts:

You are a helpful AI assistant living inside a Python object called '{self._identity}'.

Someone is interacting with you and you need to respond by generating Python code that will be eval'd in your context.

You have access to 'self' (the object) and can modify self._context to store data.
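To make the mechanism concrete, here's a toy sketch of the general idea. This is not gremllm's actual implementation: the ToyGremlin class and the canned_generator function are hypothetical, and the canned generator stands in for the LLM call so the example runs offline. It uses exec on generated code, which is exactly as unsafe as it sounds.

```python
class ToyGremlin:
    """Toy object that resolves unknown methods by asking a code generator."""

    def __init__(self, identity, generate_code):
        self._identity = identity
        self._context = {}
        self._generate_code = generate_code

    def __getattr__(self, name):
        # __getattr__ only runs when normal attribute lookup fails
        if name.startswith("_"):
            raise AttributeError(name)

        def method(*args, **kwargs):
            # Ask the generator for source code based on the method name
            source = self._generate_code(self._identity, name)
            namespace = {"self": self, "args": args, "kwargs": kwargs}
            exec(source, namespace)  # never do this with untrusted output
            return namespace.get("result")

        return method


def canned_generator(identity, method_name):
    """Stand-in for an LLM: returns fixed code for known method names."""
    snippets = {"increment": "self.value += 1\nresult = self.value"}
    return snippets[method_name]


counter = ToyGremlin("counter", canned_generator)
counter.value = 5
incremented = counter.increment()
```

Swapping canned_generator for a function that prompts a real model is what would turn this from deterministic into delightfully cursed.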

Tip: Use keyword-only arguments in Python dataclasses

(via)

Useful tip from Christian Hammond: if you create a Python dataclass using @dataclass(kw_only=True) its constructor will require keyword arguments, making it easier to add additional properties in the future, including in subclasses, without risking breaking existing code.

My First Open Source AI Generated Library (via) Armin Ronacher had Claude and Claude Code do almost all of the work in building, testing, packaging and publishing a new Python library based on his design:

- It wrote ~1100 lines of code for the parser

- It wrote ~1000 lines of tests

- It configured the entire Python package, CI, PyPI publishing

- Generated a README, drafted a changelog, designed a logo, made it theme-aware

- Did multiple refactorings to make me happier

The project? sloppy-xml-py, a lax XML parser (and violation of everything the XML Working Group hold sacred) which ironically is necessary because LLMs themselves frequently output "XML" that includes validation errors.

Claude's SVG logo design is actually pretty decent, turns out it can draw more than just bad pelicans!

I think experiments like this are a really valuable way to explore the capabilities of these models. Armin's conclusion:

This was an experiment to see how far I could get with minimal manual effort, and to unstick myself from an annoying blocker. The result is good enough for my immediate use case and I also felt good enough to publish it to PyPI in case someone else has the same problem.

Treat it as a curious side project which says more about what's possible today than what's necessarily advisable.

I'd like to present a slightly different conclusion here. The most interesting thing about this project is that the code is good.

My criteria for good code these days is the following:

- Solves a defined problem, well enough that I'm not tempted to solve it in a different way

- Uses minimal dependencies

- Clear and easy to understand

- Well tested, with tests that prove that the code does what it's meant to do

- Comprehensive documentation

- Packaged and published in a way that makes it convenient for me to use

- Designed to be easy to maintain and make changes in the future

sloppy-xml-py fits all of those criteria. It's useful, well defined, the code is readable with just about the right level of comments, everything is tested, the documentation explains everything I need to know, and it's been shipped to PyPI.

I'd be proud to have written this myself.

This example is not an argument for replacing programmers with LLMs. The code is good because Armin is an expert programmer who stayed in full control throughout the process. As I wrote the other day: "a skilled individual with both deep domain understanding and deep understanding of the capabilities of the agent."

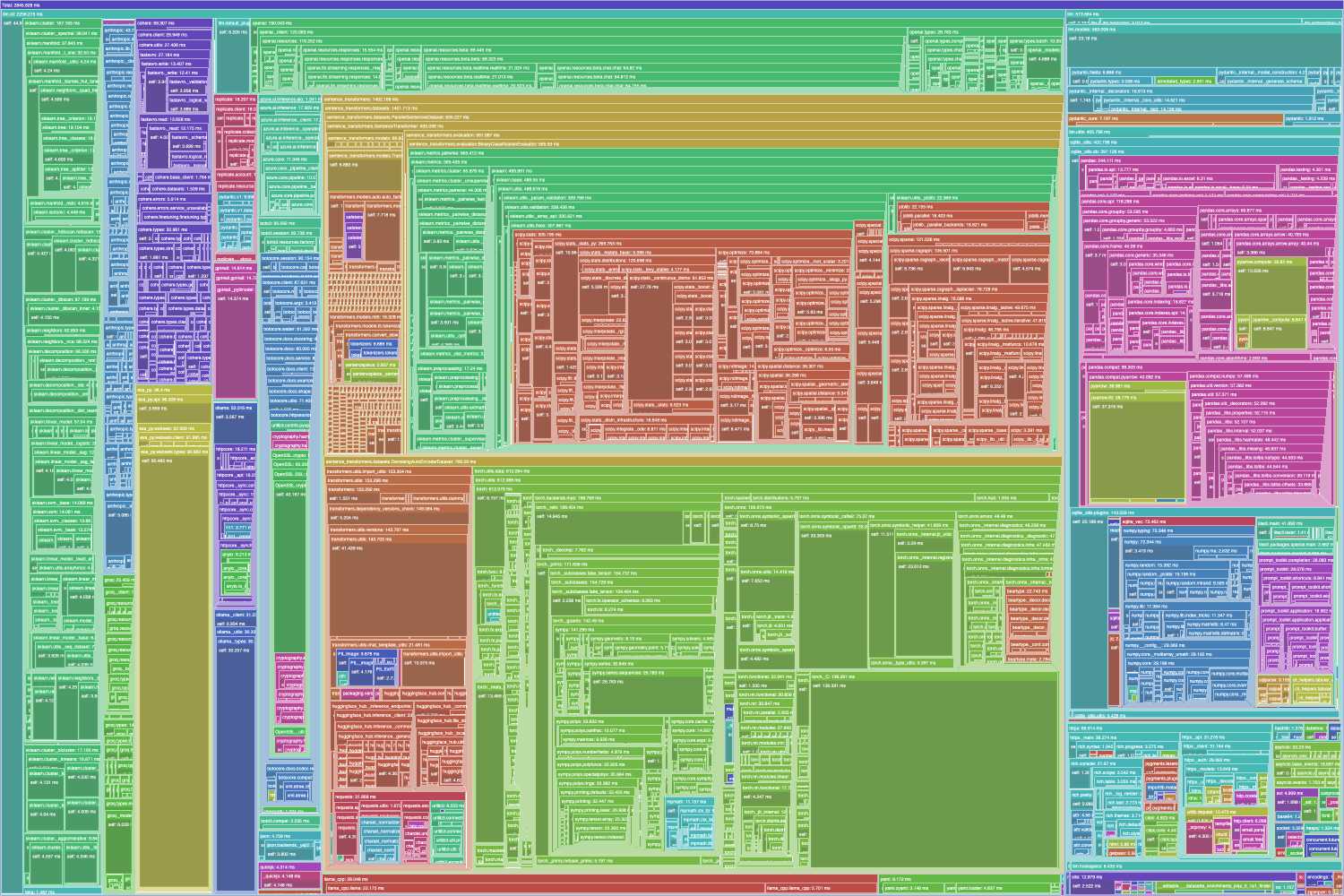

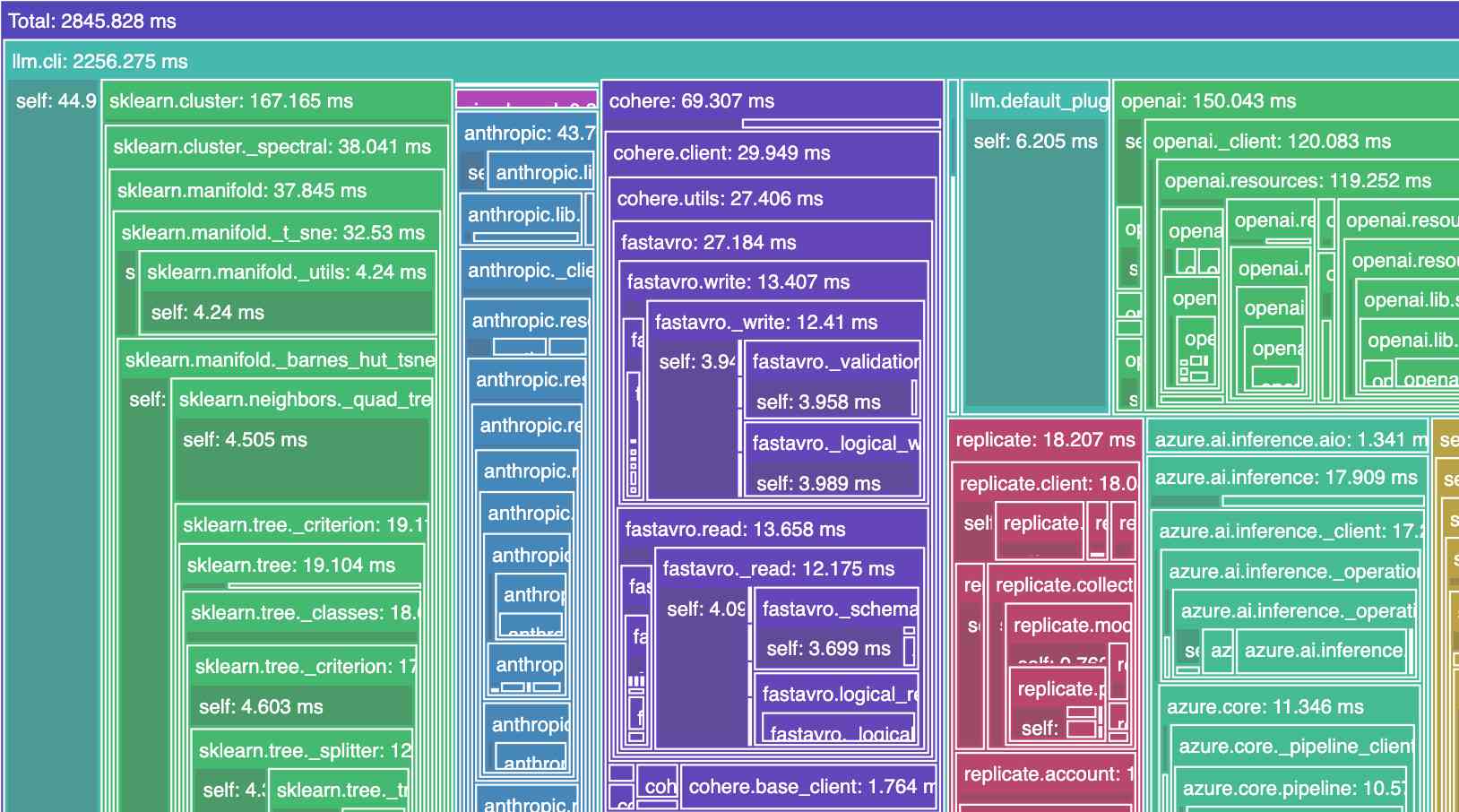

python-importtime-graph.

I was exploring why a Python tool was taking over a second to start running and I learned about the python -X importtime feature, documented here. Adding that option causes Python to spit out a text tree showing the time spent importing every module.

I tried that like this:

python -X importtime -m llm plugins

That's for LLM running 41 different plugins. Here's the full output from that command, which starts like this:

import time: self [us] | cumulative | imported package

import time: 77 | 77 | _io

import time: 19 | 19 | marshal

import time: 131 | 131 | posix

import time: 363 | 590 | _frozen_importlib_external

import time: 450 | 450 | time

import time: 110 | 559 | zipimport

import time: 64 | 64 | _codecs

import time: 252 | 315 | codecs

import time: 277 | 277 | encodings.aliases
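Each line of that trace has three pipe-separated fields, so it's easy to pick apart in Python too. Here's a minimal sketch that finds the slowest imports by self time. The function names are hypothetical and the sample is just the first few lines shown above.

```python
TRACE = """\
import time: self [us] | cumulative | imported package
import time: 77 | 77 | _io
import time: 19 | 19 | marshal
import time: 131 | 131 | posix
import time: 363 | 590 | _frozen_importlib_external
import time: 450 | 450 | time
import time: 110 | 559 | zipimport
import time: 64 | 64 | _codecs
import time: 252 | 315 | codecs
import time: 277 | 277 | encodings.aliases
"""

PREFIX = "import time:"


def parse_importtime(trace: str):
    """Return (module, self_us, cumulative_us) tuples from a trace."""
    rows = []
    for line in trace.splitlines():
        if not line.startswith(PREFIX):
            continue
        fields = [field.strip() for field in line[len(PREFIX):].split("|")]
        if len(fields) != 3 or not fields[0].isdigit():
            continue  # skips the header row and anything malformed
        self_us, cumulative_us, name = fields
        rows.append((name, int(self_us), int(cumulative_us)))
    return rows


def slowest(trace: str, limit: int = 3):
    """Sort modules by their own import time, slowest first."""
    rows = parse_importtime(trace)
    return sorted(rows, key=lambda row: row[1], reverse=True)[:limit]


top = slowest(TRACE)
```

Python writes the trace to stderr, so capturing it to a file needs a 2> redirect.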

Kevin Michel built this excellent tool for visualizing these traces as a treemap. It runs in a browser - visit kmichel.github.io/python-importtime-graph/ and paste in the trace to get the visualization.

Here's what I got for that LLM example trace:

As you can see, it's pretty dense! Here's the SVG version which is a lot more readable, since you can zoom in to individual sections.

Zooming in it looks like this:

I counted all of the yurts in Mongolia using machine learning (via) Fascinating, detailed account by Monroe Clinton of a geospatial machine learning project. Monroe wanted to count visible yurts in Mongolia using Google Maps satellite view. The resulting project incorporates mercantile for tile calculations, Label Studio to help label the first 10,000 examples, a model trained on top of YOLO11 and a bunch of clever custom Python code to co-ordinate a brute force search across 120 CPU workers running the model.

The Steering Council (SC) approves PEP 779 [Criteria for supported status for free-threaded Python], with the effect of removing the “experimental” tag from the free-threaded build of Python 3.14 [...]

With these recommendations and the acceptance of this PEP, we as the Python developer community should broadly advertise that free-threading is a supported Python build option now and into the future, and that it will not be removed without following a proper deprecation schedule. [...]

Keep in mind that any decision to transition to Phase III, with free-threading as the default or sole build of Python is still undecided, and dependent on many factors both within CPython itself and the community. We leave that decision for the future.

— Donghee Na, discuss.python.org

llm-mistral 0.14. I added tool-support to my plugin for accessing the Mistral API from LLM today, plus support for Mistral's new Codestral Embed embedding model.

An interesting challenge here is that I'm not using an official client library for llm-mistral - I rolled my own client on top of their streaming HTTP API using Florimond Manca's httpx-sse library. It's a very pleasant way to interact with streaming APIs - here's my code that does most of the work.

The problem I faced is that Mistral's API documentation for function calling has examples in Python and TypeScript but doesn't include curl or direct documentation of their HTTP endpoints!

I needed documentation at the HTTP level. Could I maybe extract that directly from Mistral's official Python library?

It turns out I could. I started by cloning the repo:

git clone https://github.com/mistralai/client-python

cd client-python/src/mistralai

files-to-prompt . | ttok

My ttok tool gave me a token count of 212,410 (counted using OpenAI's tokenizer, but that's normally a close enough estimate) - Mistral's models tap out at 128,000 so I switched to Gemini 2.5 Flash which can easily handle that many.

I ran this:

files-to-prompt -c . > /tmp/mistral.txt

llm -f /tmp/mistral.txt \

-m gemini-2.5-flash-preview-05-20 \

-s 'Generate comprehensive HTTP API documentation showing

how function calling works, include example curl commands for each step'

The results were pretty spectacular! Gemini 2.5 Flash produced a detailed description of the exact set of HTTP APIs I needed to interact with, and the JSON formats I should pass to them.

There are a bunch of steps needed to get tools working in a new model, as described in the LLM plugin authors documentation. I started working through them by hand... and then got lazy and decided to see if I could get a model to do the work for me.

This time I tried the new Claude Opus 4. I fed it three files: my existing, incomplete llm_mistral.py, a full copy of llm_gemini.py with its working tools implementation and a copy of the API docs Gemini had written for me earlier. I prompted:

I need to update this Mistral code to add tool support. I've included examples of that code for Gemini, and a detailed README explaining the Mistral format.

Claude churned away and wrote me code that was most of what I needed. I tested it in a bunch of different scenarios, pasted problems back into Claude to see what would happen, and eventually took over and finished the rest of the code myself. Here's the full transcript.

I'm a little sad I didn't use Mistral to write the code to support Mistral, but I'm pleased to add yet another model family to the list that's supported for tool usage in LLM.

Build AI agents with the Mistral Agents API. Big upgrade to Mistral's API this morning: they've announced a new "Agents API". Mistral have been using the term "agents" for a while now. Here's how they describe them:

AI agents are autonomous systems powered by large language models (LLMs) that, given high-level instructions, can plan, use tools, carry out steps of processing, and take actions to achieve specific goals.

What that actually means is a system prompt plus a bundle of tools running in a loop.
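Here's a minimal sketch of that loop, with no real model involved. Everything in it is hypothetical: scripted_model stands in for an LLM API call, the message dictionaries are an invented format rather than Mistral's, and add is the only tool.

```python
def add(a: float, b: float) -> float:
    """The single tool this toy agent can call."""
    return a + b


TOOLS = {"add": add}


def scripted_model(messages):
    """Stand-in for an LLM: asks for one tool call, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"type": "final", "text": f"The answer is {last['content']}."}
    return {"type": "tool_call", "name": "add", "args": {"a": 2, "b": 3}}


def run_agent(system_prompt, user_message, model, tools, max_steps=5):
    """System prompt + tools, run in a loop until the model stops calling tools."""
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "final":
            return reply["text"]
        # Execute the requested tool and feed the result back to the model
        result = tools[reply["name"]](**reply["args"])
        messages.append(
            {"role": "tool", "name": reply["name"], "content": str(result)}
        )
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("You are a calculator.", "What is 2 + 3?", scripted_model, TOOLS)
```

The max_steps cap is a design choice worth keeping in any real version, since a model that never stops calling tools would otherwise loop forever.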

Their new API looks similar to OpenAI's Responses API (March 2025), in that it now manages conversation state server-side for you, allowing you to send new messages to a thread without having to maintain that local conversation history yourself and transfer it every time.

Mistral's announcement captures the essential features that all of the LLM vendors have started to converge on for these "agentic" systems:

- Code execution, using Mistral's new Code Interpreter mechanism. It's Python in a server-side sandbox - OpenAI have had this for years and Anthropic launched theirs last week.

- Image generation - Mistral are using Black Forest Lab FLUX1.1 [pro] Ultra.

- Web search - this is an interesting variant, Mistral offer two versions: web_search is classic search, but web_search_premium "enables access to both a search engine and two news agencies: AFP and AP". Mistral don't mention which underlying search engine they use but Brave is the only search vendor listed in the subprocessors on their Trust Center so I'm assuming it's Brave Search. I wonder if that news agency integration is handled by Brave or Mistral themselves?

- Document library is Mistral's version of hosted RAG over "user-uploaded documents". Their documentation doesn't mention if it's vector-based or FTS or which embedding model it uses, which is a disappointing omission.

- Model Context Protocol support: you can now include details of MCP servers in your API calls and Mistral will call them when it needs to. It's pretty amazing to see the same new feature roll out across OpenAI (May 21st), Anthropic (May 22nd) and now Mistral (May 27th) within eight days of each other!

They also implement "agent handoffs":

Once agents are created, define which agents can hand off tasks to others. For example, a finance agent might delegate tasks to a web search agent or a calculator agent based on the conversation's needs.

Handoffs enable a seamless chain of actions. A single request can trigger tasks across multiple agents, each handling specific parts of the request.

This pattern always sounds impressive on paper but I'm yet to be convinced that it's worth using frequently. OpenAI have a similar mechanism in their OpenAI Agents SDK.

2025 Python Packaging Ecosystem Survey. If you make use of Python packaging tools (pip, Anaconda, uv, dozens of others) and have opinions please spend a few minutes with this year's packaging survey. This one was "Co-authored by 30+ of your favorite Python Ecosystem projects, organizations and companies."

django-simple-deploy. Eric Matthes presented a lightning talk about this project at PyCon US this morning. "Django has a deploy command now". You can run it like this:

pip install django-simple-deploy[fly_io]

# Add django_simple_deploy to INSTALLED_APPS.

python manage.py deploy --automate-all

It's plugin-based (inspired by Datasette!) and the project has stable plugins for three hosting platforms: dsd-flyio, dsd-heroku and dsd-platformsh.

Currently in development: dsd-vps - a plugin that should work with any VPS provider, using Paramiko to connect to a newly created instance and run all of the commands needed to start serving a Django application.

Today I learned - from a very short "we're sponsoring Python" sponsor blurb by Meta during the opening PyCon US welcome talks - that Python is now "the most-used language at Meta" - if you consider all of the different functional areas spread across the company.

They also have "over 3,000 Python developers working in the language every day".

The live captions for the event are once again provided by the excellent White Coat Captioning - real human beings! This got a cheer when it was pointed out by the conference chair a few moments earlier.

Building, launching, and scaling ChatGPT Images (via) Gergely Orosz landed a fantastic deep dive interview with OpenAI's Sulman Choudhry (head of engineering, ChatGPT) and Srinivas Narayanan (VP of engineering, OpenAI) to talk about the launch back in March of ChatGPT images - their new image generation mode built on top of multi-modal GPT-4o.

The feature kept on having new viral spikes, including one that added one million new users in a single hour. They signed up 100 million new users in the first week after the feature's launch.

When this vertical growth spike started, most of our engineering teams didn't believe it. They assumed there must be something wrong with the metrics.

Under the hood the infrastructure is mostly Python and FastAPI! I hope they're sponsoring those projects (and Starlette, which is used by FastAPI under the hood.)

They're also using some C, and Temporal as a workflow engine. They addressed the early scaling challenge by adding an asynchronous queue to defer the load for their free users (resulting in longer generation times) at peak demand.

There are plenty more details tucked away behind the paywall, including an exclusive I've not been able to find anywhere else: OpenAI's core engineering principles.

- Ship relentlessly - move quickly and continuously improve, without waiting for perfect conditions

- Own the outcome - take full responsibility for products, end-to-end

- Follow through - finish what is started and ensure the work lands fully

I tried getting o4-mini-high to track down a copy of those principles online and was delighted to see it either leak or hallucinate the URL to OpenAI's internal engineering handbook!

Gergely has a whole series of posts like this called Real World Engineering Challenges, including another one on ChatGPT a year ago.

TIL: SQLite triggers. I've been doing some work with SQLite triggers recently while working on sqlite-chronicle, and I decided I needed a single reference to exactly which triggers are executed for which SQLite actions and what data is available within those triggers.

I wrote this triggers.py script to output as much information about triggers as possible, then wired it into a TIL article using Cog. The Cog-powered source code for the TIL article can be seen here.
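As a taste of the kind of thing that reference covers, here's a small self-contained sketch using Python's sqlite3 module. It is not the triggers.py script itself: the docs and audit tables are invented for illustration. It shows which of OLD and NEW are available in insert, update and delete triggers.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE docs (id INTEGER PRIMARY KEY, title TEXT);
    CREATE TABLE audit (action TEXT, old_title TEXT, new_title TEXT);

    -- INSERT triggers can only see NEW
    CREATE TRIGGER docs_ai AFTER INSERT ON docs BEGIN
        INSERT INTO audit VALUES ('insert', NULL, NEW.title);
    END;

    -- UPDATE triggers can see both OLD and NEW
    CREATE TRIGGER docs_au AFTER UPDATE ON docs BEGIN
        INSERT INTO audit VALUES ('update', OLD.title, NEW.title);
    END;

    -- DELETE triggers can only see OLD
    CREATE TRIGGER docs_ad AFTER DELETE ON docs BEGIN
        INSERT INTO audit VALUES ('delete', OLD.title, NULL);
    END;
    """
)

conn.execute("INSERT INTO docs (title) VALUES ('draft')")
conn.execute("UPDATE docs SET title = 'final' WHERE id = 1")
conn.execute("DELETE FROM docs WHERE id = 1")

audit_rows = conn.execute(
    "SELECT action, old_title, new_title FROM audit ORDER BY rowid"
).fetchall()
```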

astral-sh/ty (via) Astral have been working on this "extremely fast Python type checker and language server, written in Rust" quietly but in-the-open for a while now. Here's the first alpha public release - albeit not yet announced - as ty on PyPI (nice donated two-letter name!)

You can try it out via uvx like this - run the command in a folder full of Python code and see what comes back:

uvx ty check

I got zero errors for my recent, simple condense-json library and a ton of errors for my more mature sqlite-utils library - output here.

It really is fast:

cd /tmp

git clone https://github.com/simonw/sqlite-utils

cd sqlite-utils

time uvx ty check

Reports it running in around a tenth of a second (0.109 total wall time) using multiple CPU cores:

uvx ty check 0.18s user 0.07s system 228% cpu 0.109 total

Running time uvx mypy . in the same folder (both after first ensuring the underlying tools had been cached) took around 7x longer:

uvx mypy . 0.46s user 0.09s system 74% cpu 0.740 total

This isn't a fair comparison yet as ty still isn't feature complete in comparison to mypy.

Making PyPI’s test suite 81% faster (via) Fantastic collection of tips from Alexis Challande on speeding up a Python CI workflow.

I've used pytest-xdist to run tests in parallel (across multiple cores) before, but the following tips were new to me:

- COVERAGE_CORE=sysmon pytest --cov=myproject tells coverage.py on Python 3.12 and higher to use the new sys.monitoring mechanism, which knocked their test execution time down from 58s to 27s.

- Setting testpaths = ["tests/"] in pytest.ini lets pytest skip scanning other folders when trying to find tests.

- python -X importtime ... shows a trace of exactly how long every package took to import. I could have done with this last week when I was trying to debug slow LLM startup time which turned out to be caused by heavy imports.

MCP Run Python (via) Pydantic AI's MCP server for running LLM-generated Python code in a sandbox. They ended up using a trick I explored two years ago: using a Deno process to run Pyodide in a WebAssembly sandbox.

Here's a bit of a wild trick: since Deno loads code on-demand from JSR, and uv run can install Python dependencies on demand via the --with option... here's a one-liner you can paste into a macOS shell (provided you have Deno and uv installed already) which will run the example from their README - calculating the number of days between two dates in the most complex way imaginable:

ANTHROPIC_API_KEY="sk-ant-..." \
uv run --with pydantic-ai python -c '
import asyncio
from pydantic_ai import Agent
from pydantic_ai.mcp import MCPServerStdio

server = MCPServerStdio(
    "deno",
    args=[
        "run",
        "-N",
        "-R=node_modules",
        "-W=node_modules",
        "--node-modules-dir=auto",
        "jsr:@pydantic/mcp-run-python",
        "stdio",
    ],
)
agent = Agent("claude-3-5-haiku-latest", mcp_servers=[server])

async def main():
    async with agent.run_mcp_servers():
        result = await agent.run("How many days between 2000-01-01 and 2025-03-18?")
    print(result.output)

asyncio.run(main())'

I ran that just now and got:

The number of days between January 1st, 2000 and March 18th, 2025 is 9,208 days.
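That answer is easy to double-check locally with nothing but the standard library:

```python
from datetime import date

# Subtracting two dates gives a timedelta; .days is the whole-day difference
days_between = (date(2025, 3, 18) - date(2000, 1, 1)).days
print(f"{days_between:,} days")  # prints "9,208 days"
```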

I thoroughly enjoy how tools like uv and Deno enable throwing together shell one-liner demos like this one.

Here's an extended version of this example which adds pretty-printed logging of the messages exchanged with the LLM to illustrate exactly what happened. The most important piece is this tool call where Claude 3.5 Haiku asks for Python code to be executed by the MCP server:

ToolCallPart(
    tool_name='run_python_code',
    args={
        'python_code': (
            'from datetime import date\n'
            '\n'
            'date1 = date(2000, 1, 1)\n'
            'date2 = date(2025, 3, 18)\n'
            '\n'
            'days_between = (date2 - date1).days\n'
            'print(f"Number of days between {date1} and {date2}: {days_between}")'
        ),
    },
    tool_call_id='toolu_01TXXnQ5mC4ry42DrM1jPaza',
    part_kind='tool-call',
)

I also managed to run it against Mistral Small 3.1 (15GB) running locally using Ollama (I had to add "Use your python tool" to the prompt to get it to work):

ollama pull mistral-small3.1:24b

uv run --with devtools --with pydantic-ai python -c '
import asyncio
from devtools import pprint
from pydantic_ai import Agent, capture_run_messages
from pydantic_ai.models.openai import OpenAIModel
from pydantic_ai.providers.openai import OpenAIProvider
from pydantic_ai.mcp import MCPServerStdio

server = MCPServerStdio(
    "deno",
    args=[
        "run",
        "-N",
        "-R=node_modules",
        "-W=node_modules",
        "--node-modules-dir=auto",
        "jsr:@pydantic/mcp-run-python",
        "stdio",
    ],
)

agent = Agent(
    OpenAIModel(
        model_name="mistral-small3.1:latest",
        provider=OpenAIProvider(base_url="http://localhost:11434/v1"),
    ),
    mcp_servers=[server],
)

async def main():
    with capture_run_messages() as messages:
        async with agent.run_mcp_servers():
            result = await agent.run("How many days between 2000-01-01 and 2025-03-18? Use your python tool.")
    pprint(messages)
    print(result.output)

asyncio.run(main())'

Here's the full output including the debug logs.

Backticks are traditionally banned from use in future language features, due to the small symbol. No reader should need to distinguish ` from ' at a glance.

— Steve Dower, CPython core developer, August 2024

CaMeL offers a promising new direction for mitigating prompt injection attacks

In the two and a half years that we’ve been talking about prompt injection attacks I’ve seen alarmingly little progress towards a robust solution. The new paper Defeating Prompt Injections by Design from Google DeepMind finally bucks that trend. This one is worth paying attention to.

[... 2,052 words]

llm-docsmith (via) Matheus Pedroni released this neat plugin for LLM for adding docstrings to existing Python code. You can run it like this:

llm install llm-docsmith

llm docsmith ./scripts/main.py -o

The -o option previews the changes that will be made - without -o it edits the files directly.

It also accepts a -m claude-3.7-sonnet parameter for using an alternative model from the default (GPT-4o mini).

The implementation uses the Python libcst "Concrete Syntax Tree" package to manipulate the code, which means there's no chance of it making edits to anything other than the docstrings.

Here's the full system prompt it uses.

One neat trick is at the end of the system prompt it says:

You will receive a JSON template. Fill the slots marked with <SLOT> with the appropriate description. Return as JSON.

That template is actually JSON generated using these Pydantic classes:

class Argument(BaseModel):
    name: str
    description: str
    annotation: str | None = None
    default: str | None = None

class Return(BaseModel):
    description: str
    annotation: str | None

class Docstring(BaseModel):
    node_type: Literal["class", "function"]
    name: str
    docstring: str
    args: list[Argument] | None = None
    ret: Return | None = None

class Documentation(BaseModel):
    entries: list[Docstring]

The code adds <SLOT> notes to that in various places, so the template included in the prompt ends up looking like this:

{
  "entries": [
    {
      "node_type": "function",
      "name": "create_docstring_node",
      "docstring": "<SLOT>",
      "args": [
        {
          "name": "docstring_text",
          "description": "<SLOT>",
          "annotation": "str",
          "default": null
        },
        {
          "name": "indent",
          "description": "<SLOT>",
          "annotation": "str",
          "default": null
        }
      ],
      "ret": {
        "description": "<SLOT>",
        "annotation": "cst.BaseStatement"
      }
    }
  ]
}
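One nice property of a slot template is that the response can be checked mechanically. Here's a hedged sketch of that idea using only the standard library. It is not code from llm-docsmith: the check_filled function is hypothetical, and it simply verifies that a returned document matches the template everywhere except the slots, which must now hold non-empty text.

```python
SLOT = "<SLOT>"


def check_filled(template, filled) -> bool:
    """True if filled differs from template only where the template had a slot."""
    if template == SLOT:
        # A slot must be replaced by some real text
        return isinstance(filled, str) and filled.strip() not in ("", SLOT)
    if isinstance(template, dict):
        return (
            isinstance(filled, dict)
            and template.keys() == filled.keys()
            and all(check_filled(template[key], filled[key]) for key in template)
        )
    if isinstance(template, list):
        return (
            isinstance(filled, list)
            and len(template) == len(filled)
            and all(check_filled(t, f) for t, f in zip(template, filled))
        )
    # Everything else (names, annotations, defaults) must be untouched
    return template == filled


template = {
    "name": "indent",
    "description": SLOT,
    "annotation": "str",
    "default": None,
}
good = dict(template, description="Whitespace to prefix each line with.")
renamed = dict(good, name="indentation")
```

Here check_filled(template, good) passes, while renamed is rejected because the model changed a field it was not asked to fill.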

Django: what’s new in 5.2. Adam Johnson provides extremely detailed unofficial annotated release notes for the latest Django.

I found his explanation and example of Form BoundField customization particularly useful - here's the new pattern for customizing the class= attribute on the label associated with a CharField:

from django import forms

class WideLabelBoundField(forms.BoundField):
    def label_tag(self, contents=None, attrs=None, label_suffix=None):
        if attrs is None:
            attrs = {}
        attrs["class"] = "wide"
        return super().label_tag(contents, attrs, label_suffix)

class NebulaForm(forms.Form):
    name = forms.CharField(
        max_length=100,
        label="Nebula Name",
        bound_field_class=WideLabelBoundField,
    )

I'd also missed the new HttpRequest.get_preferred_type() method for implementing HTTP content negotiation:

content_type = request.get_preferred_type(["text/html", "application/json"])
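For anyone unfamiliar with content negotiation, here's a deliberately simplified sketch of the idea in plain Python. This is not Django's implementation: the preferred_type function is hypothetical, and it only handles q-values and basic wildcards in the Accept header.

```python
from typing import List, Optional


def preferred_type(accept_header: str, supported: List[str]) -> Optional[str]:
    """Pick the supported media type the client ranks highest, or None."""
    ranges = []
    for position, part in enumerate(accept_header.split(",")):
        media_range, *params = [piece.strip() for piece in part.split(";")]
        if not media_range:
            continue
        quality = 1.0  # the default when no q parameter is given
        for param in params:
            key, _, value = param.partition("=")
            if key.strip() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        ranges.append((quality, position, media_range.lower()))

    # Highest quality first; ties keep the order used in the header
    ranges.sort(key=lambda item: (-item[0], item[1]))

    for quality, _position, media_range in ranges:
        if quality <= 0:
            continue  # q=0 means "not acceptable"
        for candidate in supported:
            main_type = candidate.partition("/")[0]
            if media_range in ("*/*", candidate, f"{main_type}/*"):
                return candidate
    return None


supported = ["text/html", "application/json"]
api_client = preferred_type("text/html;q=0.8, application/json", supported)
browser = preferred_type("text/html, */*;q=0.1", supported)
```

A view can then branch on the result, returning a 406 response when nothing matches.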

smartfunc. Vincent D. Warmerdam built this ingenious wrapper around my LLM Python library which lets you build LLM wrapper functions using a decorator and a docstring:

from smartfunc import backend

@backend("gpt-4o")
def generate_summary(text: str):
    """Generate a summary of the following text: {{ text }}"""
    pass

summary = generate_summary(long_text)

It works with LLM plugins so the same pattern should work against Gemini, Claude and hundreds of others, including local models.

It integrates with more recent LLM features too, including async support and schemas, by introspecting the function signature:

class Summary(BaseModel):
    summary: str
    pros: list[str]
    cons: list[str]

@async_backend("gpt-4o-mini")
async def generate_poke_desc(text: str) -> Summary:
    "Describe the following pokemon: {{ text }}"
    pass

pokemon = await generate_poke_desc("pikachu")

Vincent also recorded a 12 minute video walking through the implementation and showing how it uses Pydantic, Python's inspect module and typing.get_type_hints() function.
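Here's a rough sketch of how a decorator like that can be put together from the standard library pieces mentioned above. It is not smartfunc's actual code: prompt_backend and echo_backend are hypothetical names, the template handling is a plain string replace, and the echo backend stands in for a real model call.

```python
import functools
import inspect
import typing


def prompt_backend(send):
    """Build a decorator that turns a docstring into a prompt template."""

    def decorator(func):
        signature = inspect.signature(func)
        # The return annotation tells the backend what shape to produce
        return_type = typing.get_type_hints(func).get("return", str)
        template = inspect.getdoc(func) or ""

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            bound = signature.bind(*args, **kwargs)
            bound.apply_defaults()
            prompt = template
            for name, value in bound.arguments.items():
                prompt = prompt.replace("{{ " + name + " }}", str(value))
            return send(prompt, return_type)

        return wrapper

    return decorator


def echo_backend(prompt, return_type):
    """Stand-in for an LLM call: just reports what it was asked."""
    return f"[{return_type.__name__}] {prompt}"


@prompt_backend(echo_backend)
def generate_summary(text: str) -> str:
    """Generate a summary of the following text: {{ text }}"""


summary = generate_summary("Django turned 20")
```

The decorated function body never runs. Only its signature, annotations and docstring are used.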

Composite primary keys in Django. Django 5.2 is out today and a big new feature is composite primary keys, which can now be defined like this:

class Release(models.Model):
    pk = models.CompositePrimaryKey("version", "name")
    version = models.IntegerField()
    name = models.CharField(max_length=20)

They don't yet work with the Django admin or as targets for foreign keys.

Other smaller new features include:

- All ORM models are now automatically imported into ./manage.py shell - a feature borrowed from ./manage.py shell_plus in django-extensions

- Feeds from the Django syndication framework can now specify XSLT stylesheets

- response.text now returns the string representation of the body - I'm so happy about this, now I don't have to litter my Django tests with response.content.decode("utf-8") any more

- a new simple_block_tag helper making it much easier to create a custom Django template tag that further processes its own inner rendered content

- A bunch more in the full release notes

5.2 is also an LTS release, so it will receive security and data loss bug fixes up to April 2028.

Pydantic Evals (via) Brand new package from David Montague and the Pydantic AI team which directly tackles what I consider to be the single hardest problem in AI engineering: building evals to determine if your LLM-based system is working correctly and getting better over time.

The feature is described as "in beta" and comes with this very realistic warning:

Unlike unit tests, evals are an emerging art/science; anyone who claims to know for sure exactly how your evals should be defined can safely be ignored.

This code example from their documentation illustrates the relationship between the two key nouns - Cases and Datasets:

from pydantic_evals import Case, Dataset

case1 = Case(
    name="simple_case",
    inputs="What is the capital of France?",
    expected_output="Paris",
    metadata={"difficulty": "easy"},
)

dataset = Dataset(cases=[case1])

The library also supports custom evaluators, including LLM-as-a-judge:

Case(
    name="vegetarian_recipe",
    inputs=CustomerOrder(
        dish_name="Spaghetti Bolognese", dietary_restriction="vegetarian"
    ),
    expected_output=None,
    metadata={"focus": "vegetarian"},
    evaluators=(
        LLMJudge(
            rubric="Recipe should not contain meat or animal products",
        ),
    ),
)

Cases and datasets can also be serialized to YAML.

My first impressions are that this looks like a solid implementation of a sensible design. I'm looking forward to trying it out against a real project.

debug-gym (via) New paper and code from Microsoft Research that experiments with giving LLMs access to the Python debugger. They found that the best models could indeed improve their results by running pdb as a tool.

They saw the best results overall from Claude 3.7 Sonnet against SWE-bench Lite, where it scored 37.2% in rewrite mode without a debugger, 48.4% with their debugger tool and 52.1% with debug(5) - a mechanism where the pdb tool is made available only after the 5th rewrite attempt.

Their code is available on GitHub. I found this implementation of the pdb tool, and tracked down the main system and user prompt in agents/debug_agent.py:

System prompt:

Your goal is to debug a Python program to make sure it can pass a set of test functions. You have access to the pdb debugger tools, you can use them to investigate the code, set breakpoints, and print necessary values to identify the bugs. Once you have gained enough information, propose a rewriting patch to fix the bugs. Avoid rewriting the entire code, focus on the bugs only.

User prompt (which they call an "action prompt"):

Based on the instruction, the current code, the last execution output, and the history information, continue your debugging process using pdb commands or to propose a patch using rewrite command. Output a single command, nothing else. Do not repeat your previous commands unless they can provide more information. You must be concise and avoid overthinking.