89 posts tagged “webassembly”

2026

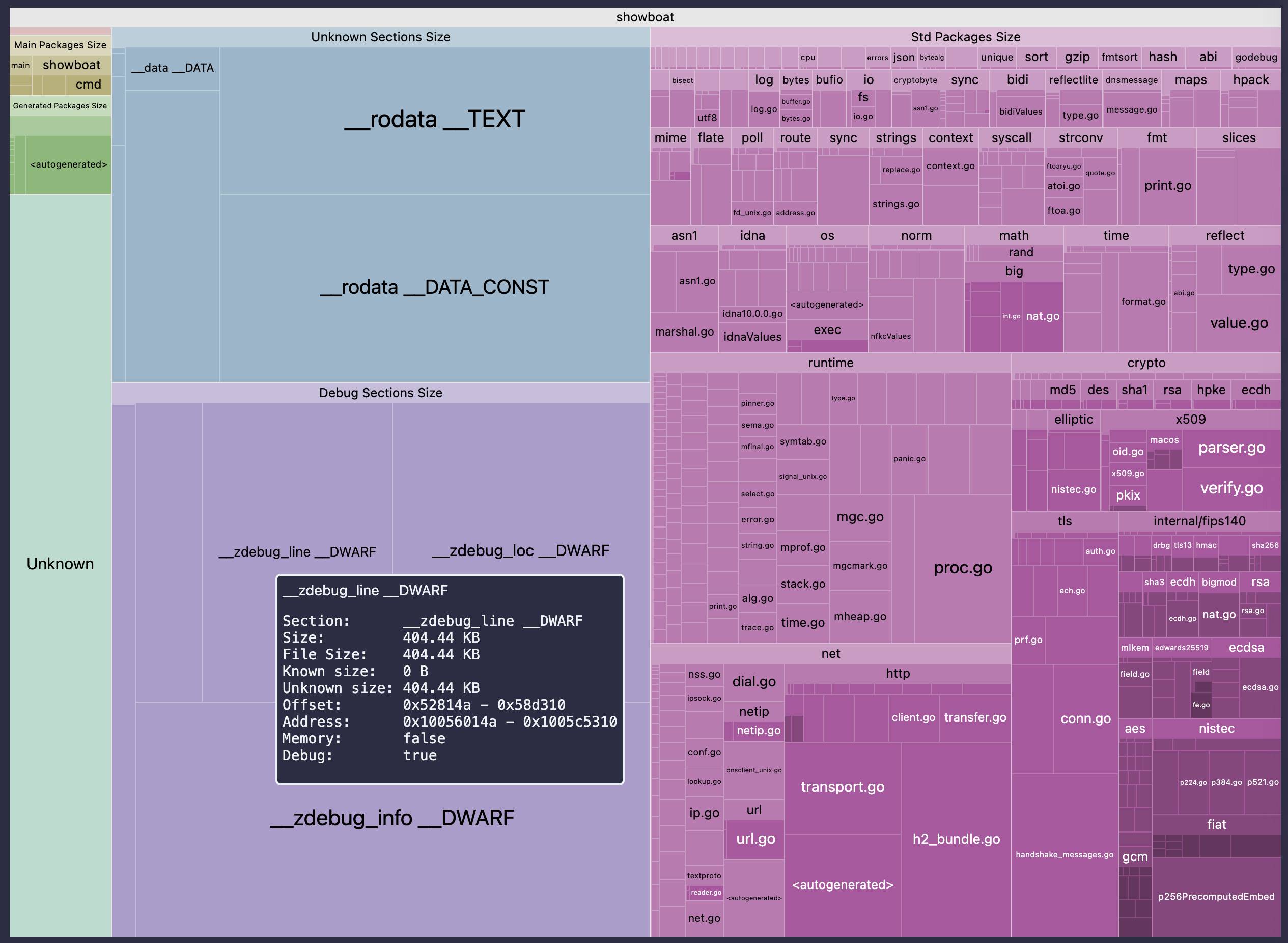

go-size-analyzer (via) The Go ecosystem is really good at tooling. I just learned about this tool for analyzing the size of Go binaries using a pleasing treemap view of their bundled dependencies.

You can install and run the tool locally, but it's also compiled to WebAssembly and hosted at gsa.zxilly.dev - which means you can open compiled Go binaries and analyze them directly in your browser.

I tried it with an 8.1MB macOS compiled copy of my Go Showboat tool and got this:

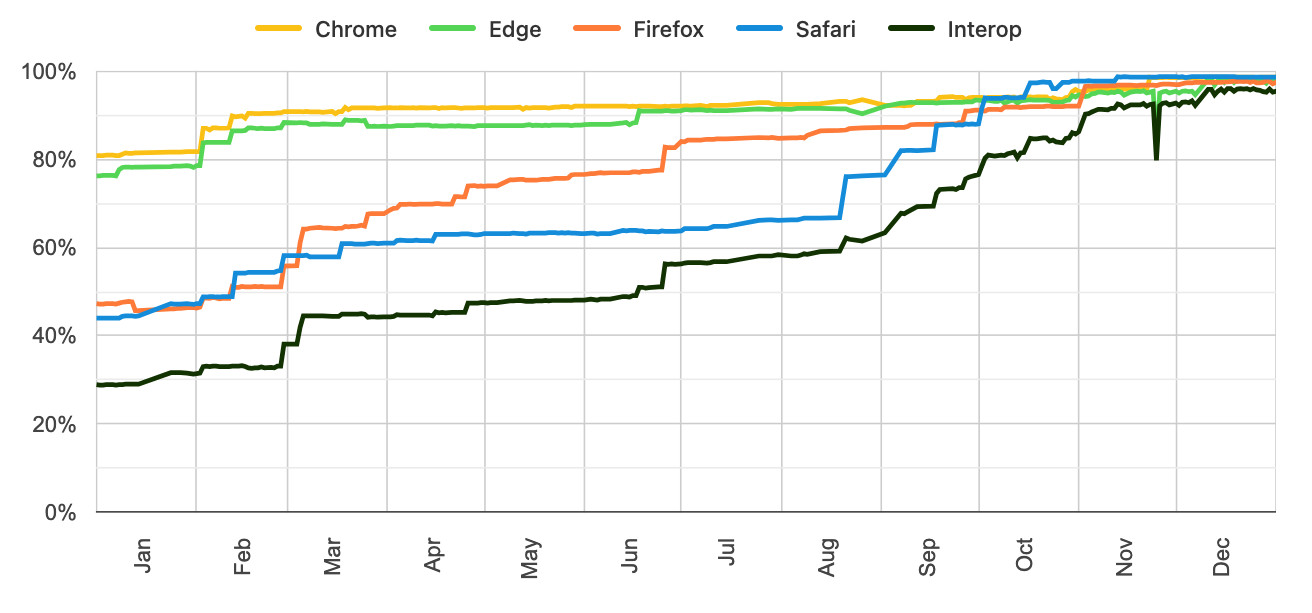

Launching Interop 2026. Jake Archibald reports on Interop 2026, the initiative between Apple, Google, Igalia, Microsoft, and Mozilla to collaborate on ensuring a targeted set of web platform features reach cross-browser parity over the course of the year.

I hadn't realized how influential and successful the Interop series has been. It started back in 2021 as Compat 2021 before being rebranded to Interop in 2022.

The dashboards for each year can be seen here, and they demonstrate how wildly effective the program has been: 2021, 2022, 2023, 2024, 2025, 2026.

Here's the progress chart for 2025, which shows every browser vendor racing towards a 95%+ score by the end of the year:

The feature I'm most excited about in 2026 is Cross-document View Transitions, building on the successful 2025 target of Same-Document View Transitions. This will provide fancy SPA-style transitions between pages on websites with no JavaScript at all.

As a keen WebAssembly tinkerer I'm also intrigued by this one:

JavaScript Promise Integration for Wasm allows WebAssembly to asynchronously 'suspend', waiting on the result of an external promise. This simplifies the compilation of languages like C/C++ which expect APIs to run synchronously.

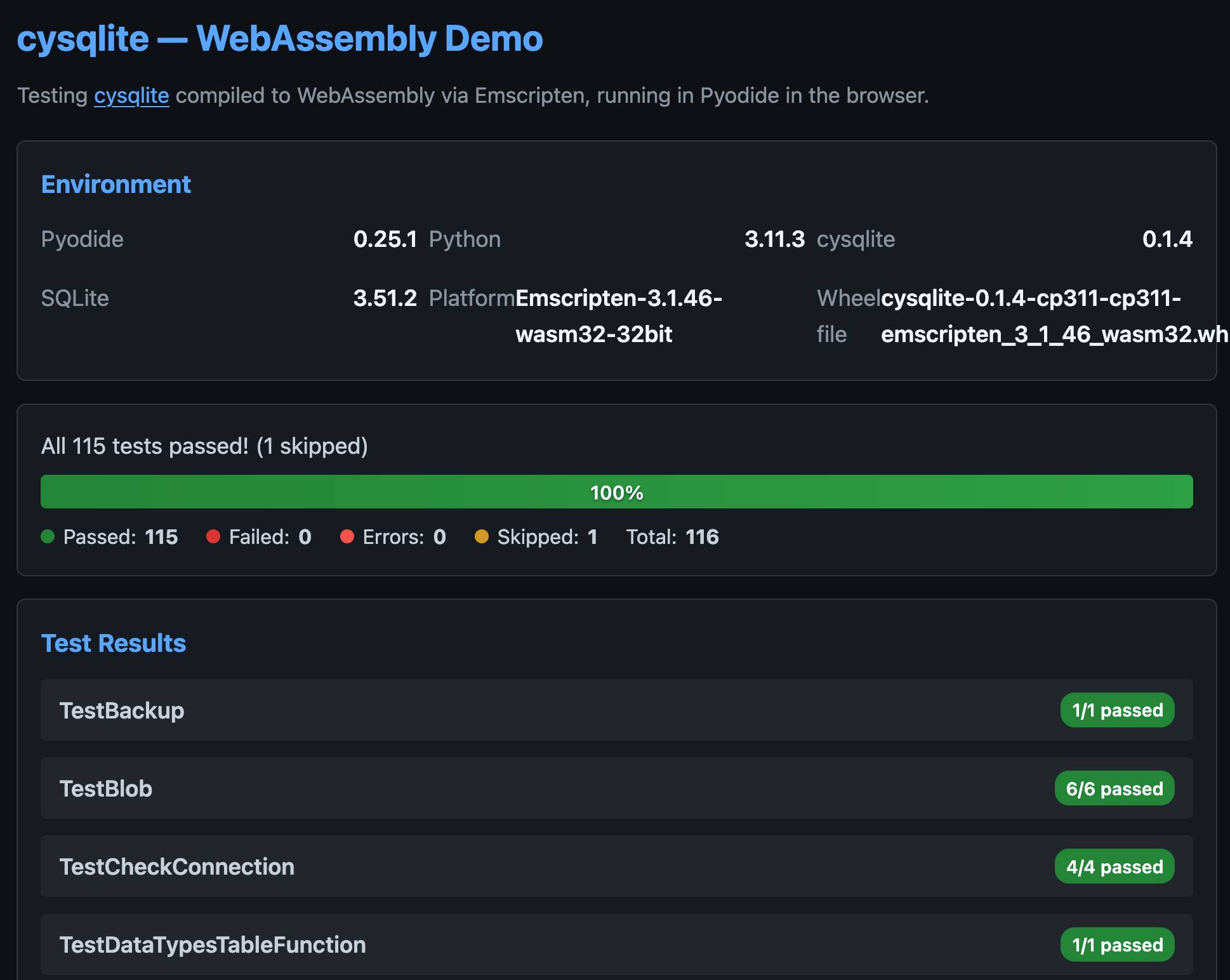

cysqlite—a new sqlite driver

(via)

Charles Leifer has been maintaining pysqlite3 - a fork of the Python standard library's sqlite3 module that makes it much easier to run upgraded SQLite versions - since 2018.

He's been working on a ground-up Cython rewrite called cysqlite for almost as long, but it's finally at a stage where it's ready for people to try out.

The biggest change from the sqlite3 module involves transactions. Charles explains his discomfort with the sqlite3 implementation at length - that library provides two different variants, neither of which exactly matches the autocommit mechanism in SQLite itself.

I'm particularly excited about the support for custom virtual tables, a feature I'd love to see in sqlite3 itself.

cysqlite provides a Python extension compiled from C, which means it normally wouldn't be available in Pyodide. I set Claude Code on it (here's the prompt) and it built me cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl, a 688KB wheel file with a WASM build of the library that can be loaded into Pyodide like this:

import micropip
await micropip.install(
    "https://simonw.github.io/research/cysqlite-wasm-wheel/cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl"
)
import cysqlite
print(cysqlite.connect(":memory:").execute(
    "select sqlite_version()"
).fetchone())

(I also learned that wheels like this have to be built for the emscripten version used by that edition of Pyodide - my experimental wheel loads in Pyodide 0.25.1 but fails in 0.27.5 with a Wheel was built with Emscripten v3.1.46 but Pyodide was built with Emscripten v3.1.58 error.)
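The Emscripten version is visible in the wheel's filename, so a mismatch like that can be spotted before trying to install. Here is a minimal sketch in plain Python, assuming the platform tag always follows the `emscripten_X_Y_Z_wasm32` pattern seen above. The helper names are hypothetical, and this is not how Pyodide itself performs the check:

```python
import re
from typing import Optional


def emscripten_version_from_wheel(filename: str) -> Optional[str]:
    """Return the Emscripten version encoded in a wheel's platform tag, or None."""
    # Assumption: the tag looks like emscripten_3_1_46_wasm32, as in the wheel above.
    match = re.search(r"emscripten_(\d+)_(\d+)_(\d+)_wasm32\.whl$", filename)
    if match is None:
        return None
    return ".".join(match.groups())


def wheel_matches_runtime(filename: str, runtime_emscripten: str) -> bool:
    """True when the wheel was built with the same Emscripten version as the runtime."""
    return emscripten_version_from_wheel(filename) == runtime_emscripten


wheel = "cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl"
print(emscripten_version_from_wheel(wheel))
print(wheel_matches_runtime(wheel, "3.1.46"))
print(wheel_matches_runtime(wheel, "3.1.58"))
```

The two version strings passed in at the end are the ones quoted in the error message above.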

You can try my wheel in this new Pyodide REPL I had Claude build as a mobile-friendly alternative to Pyodide's own hosted console.

I also had Claude build this demo page that executes the original test suite in the browser and displays the results:

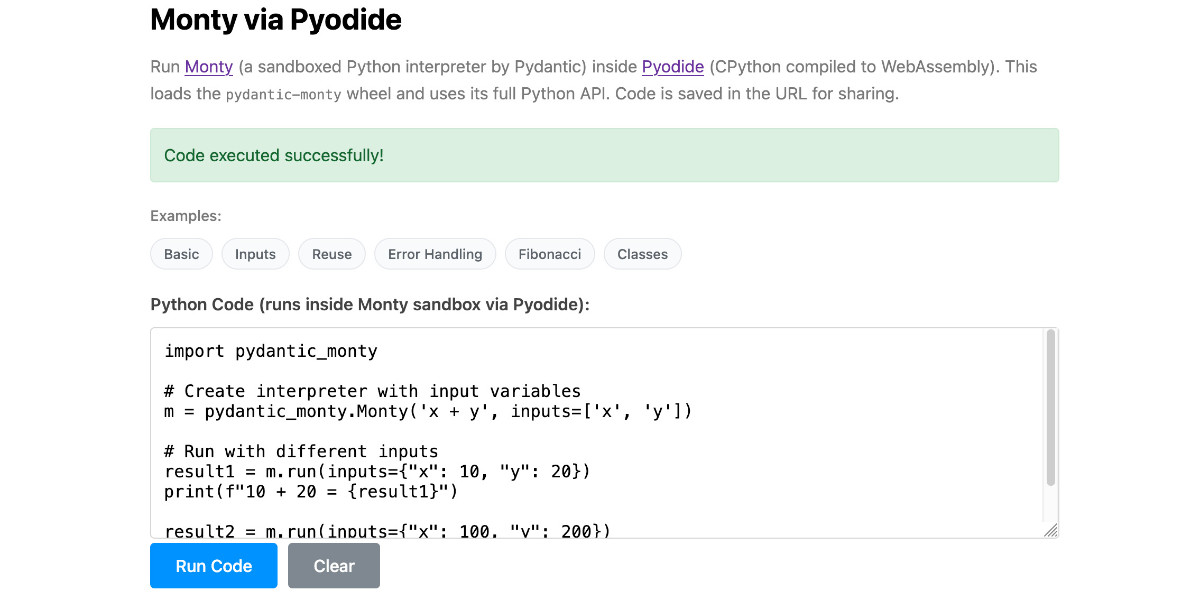

Running Pydantic’s Monty Rust sandboxed Python subset in WebAssembly

There’s a jargon-filled headline for you! Everyone’s building sandboxes for running untrusted code right now, and Pydantic’s latest attempt, Monty, provides a custom Python-like language (a subset of Python) in Rust and makes it available as both a Rust library and a Python package. I got it working in WebAssembly, providing a sandbox-in-a-sandbox.

[... 854 words]

2025

MicroQuickJS. New project from programming legend Fabrice Bellard, of ffmpeg and QEMU and QuickJS and so much more fame:

MicroQuickJS (aka. MQuickJS) is a Javascript engine targetted at embedded systems. It compiles and runs Javascript programs with as low as 10 kB of RAM. The whole engine requires about 100 kB of ROM (ARM Thumb-2 code) including the C library. The speed is comparable to QuickJS.

It supports a subset of full JavaScript, though it looks like a rich and full-featured subset to me.

One of my ongoing interests is sandboxing: mechanisms for executing untrusted code - from end users or generated by LLMs - in an environment that restricts memory usage and applies a strict time limit and restricts file or network access. Could MicroQuickJS be useful in that context?

I fired up Claude Code for web (on my iPhone) and kicked off an asynchronous research project to explore that question:

My full prompt is here. It started like this:

Clone https://github.com/bellard/mquickjs to /tmp

Investigate this code as the basis for a safe sandboxing environment for running untrusted code such that it cannot exhaust memory or CPU or access files or the network

First try building python bindings for this using FFI - write a script that builds these by checking out the code to /tmp and building against that, to avoid copying the C code in this repo permanently. Write and execute tests with pytest to exercise it as a sandbox

Then build a "real" Python extension not using FFI and experiment with that

Then try compiling the C to WebAssembly and exercising it via both node.js and Deno, with a similar suite of tests [...]

I later added to the interactive session:

Does it have a regex engine that might allow a resource exhaustion attack from an expensive regex?

(The answer was no - the regex engine calls the interrupt handler even during pathological expression backtracking, meaning that any configured time limit should still hold.)

Here's the full transcript and the final report.

Some key observations:

- MicroQuickJS is very well suited to the sandbox problem. It has robust memory and time limits baked in, it doesn't expose any dangerous primitives like filesystem or network access and even has a regular expression engine that protects against exhaustion attacks (provided you configure a time limit).

- Claude spun up and tested a Python library that calls a MicroQuickJS shared library (involving a little bit of extra C), a compiled Python binding and a library that uses the original MicroQuickJS CLI tool. All of those approaches work well.

- Compiling to WebAssembly was a little harder. It got a version working in Node.js and Deno and Pyodide, but the Python libraries wasmer and wasmtime proved harder, apparently because "mquickjs uses setjmp/longjmp for error handling". It managed to get to a working wasmtime version with a gross hack.

I'm really excited about this. MicroQuickJS is tiny, full featured, looks robust and comes from excellent pedigree. I think this makes for a very solid new entrant in the quest for a robust sandbox.

Update: I had Claude Code build tools.simonwillison.net/microquickjs, an interactive web playground for trying out the WebAssembly build of MicroQuickJS, adapted from my previous QuickJS playground. My QuickJS page loads 2.28 MB (675 KB transferred). The MicroQuickJS one loads 303 KB (120 KB transferred).

Here are the prompts I used for that.

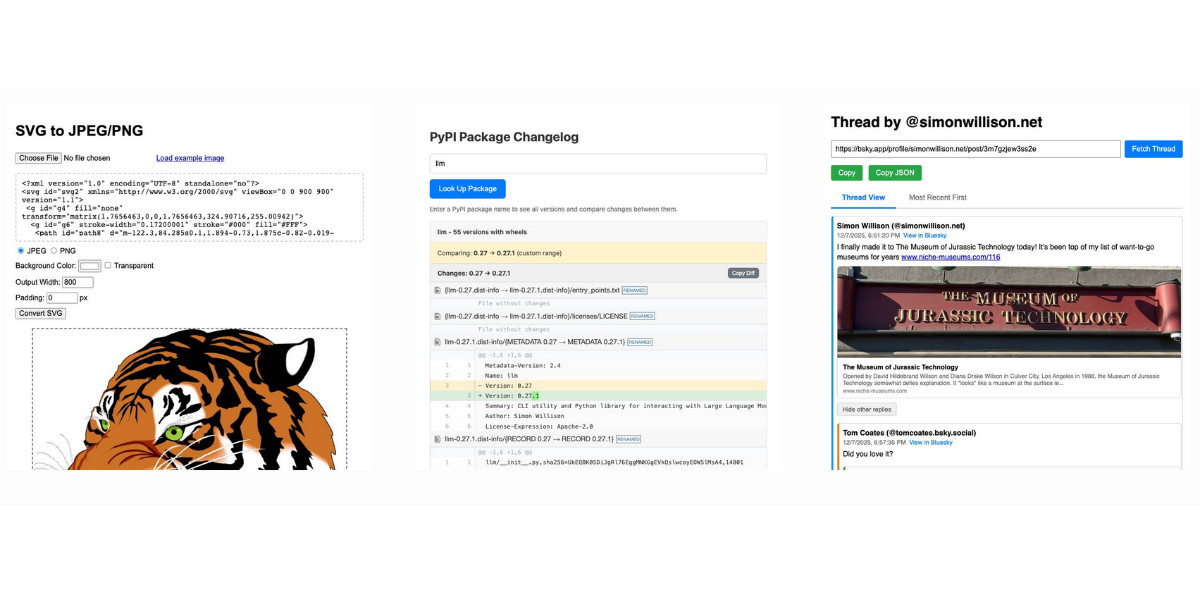

Useful patterns for building HTML tools

I’ve started using the term HTML tools to refer to HTML applications that I’ve been building which combine HTML, JavaScript, and CSS in a single file and use them to provide useful functionality. I have built over 150 of these in the past two years, almost all of them written by LLMs. This article presents a collection of useful patterns I’ve discovered along the way.

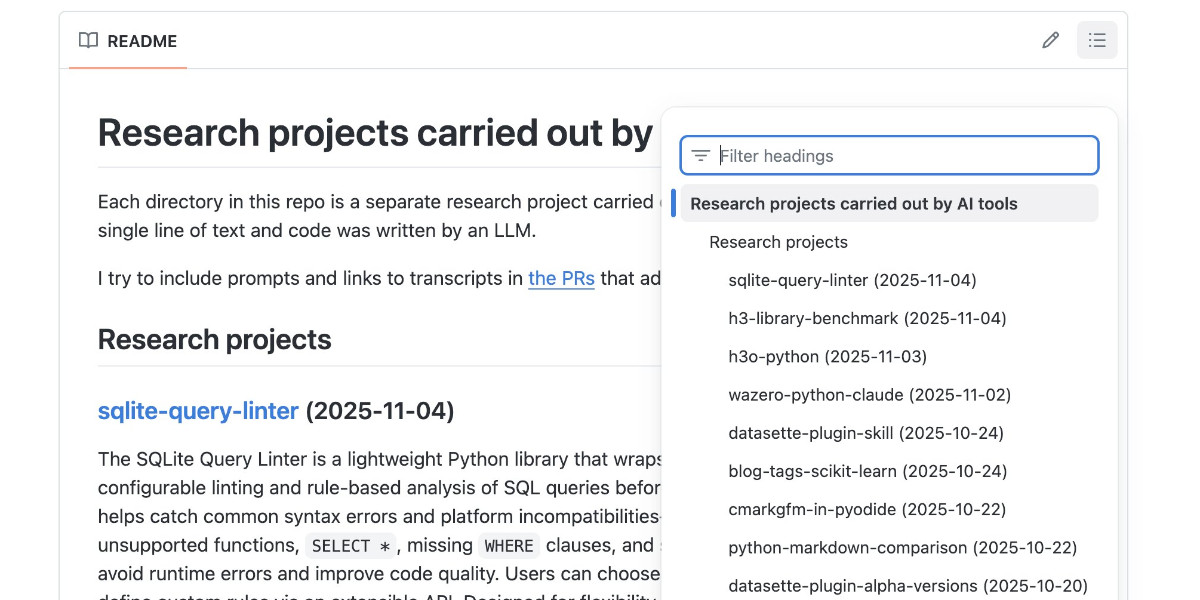

[... 4,231 words]

Code research projects with async coding agents like Claude Code and Codex

I’ve been experimenting with a pattern for LLM usage recently that’s working out really well: asynchronous code research tasks. Pick a research question, spin up an asynchronous coding agent and let it go and run some experiments and report back when it’s done.

[... 2,017 words]

Living dangerously with Claude

I gave a talk last night at Claude Code Anonymous in San Francisco, the unofficial meetup for coding agent enthusiasts. I decided to talk about a dichotomy I’ve been struggling with recently. On the one hand I’m getting enormous value from running coding agents with as few restrictions as possible. On the other hand I’m deeply concerned by the risks that accompany that freedom.

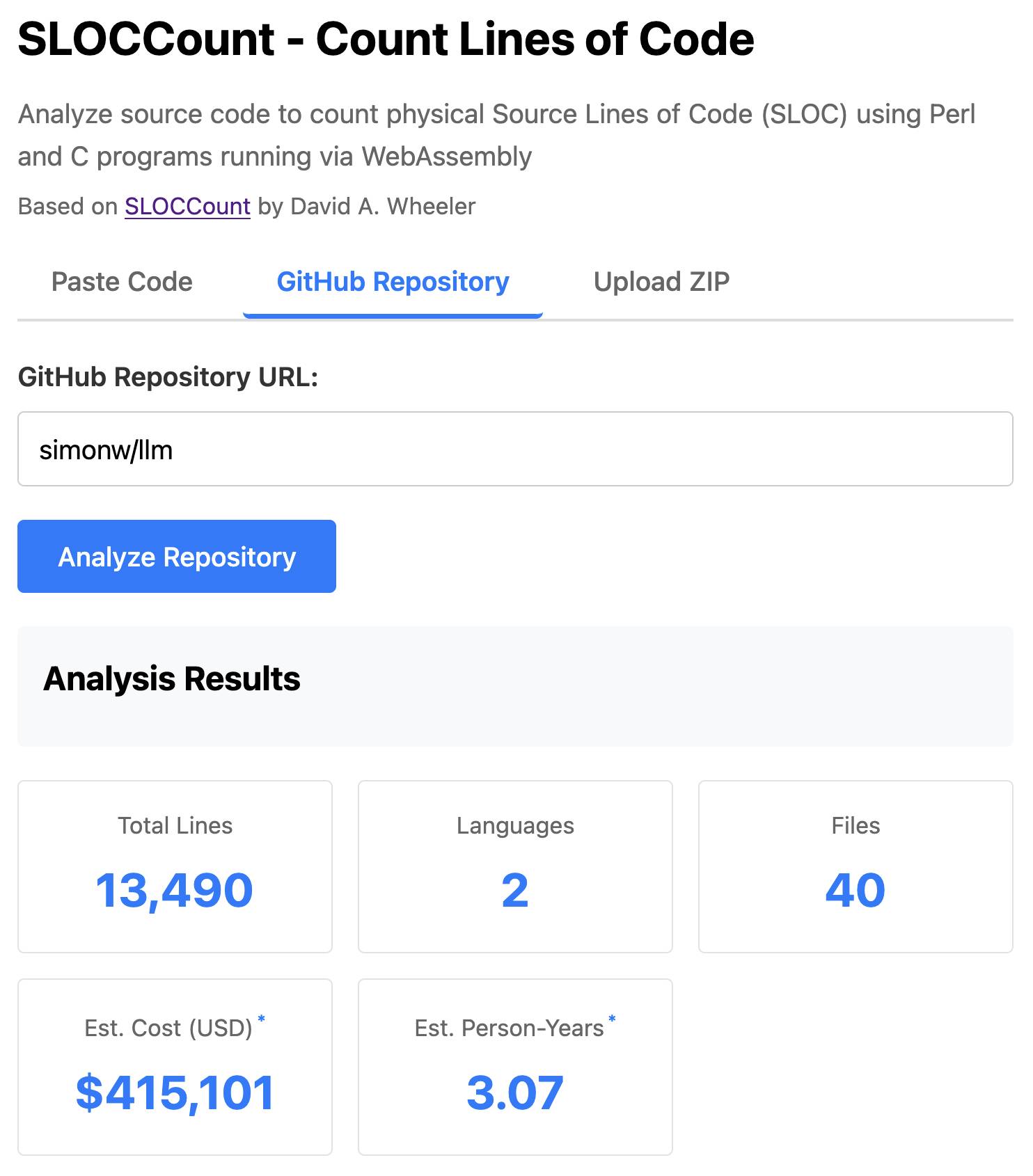

[... 2,208 words]

SLOCCount in WebAssembly. This project/side-quest got a little bit out of hand.

I remembered an old tool called SLOCCount which could count lines of code and produce an estimate for how much they would cost to develop. I thought it would be fun to play around with it again, especially given how cheap it is to generate code using LLMs these days.

Here's the homepage for SLOCCount by David A. Wheeler. It dates back to 2001!

I figured it might be fun to try and get it running on the web. Surely someone had compiled Perl to WebAssembly...?

WebPerl by Hauke Dämpfling is exactly that, even adding a neat <script type="text/perl"> tag.

I told Claude Code for web on my iPhone to figure it out and build something, giving it some hints from my initial research:

Build sloccount.html - a mobile friendly UI for running the Perl sloccount tool against pasted code or against a GitHub repository that is provided in a form field

It works using the webperl webassembly build of Perl, plus it loads Perl code from this exact commit of this GitHub repository https://github.com/licquia/sloccount/tree/7220ff627334a8f646617fe0fa542d401fb5287e - I guess via the GitHub API, maybe using the https://github.com/licquia/sloccount/archive/7220ff627334a8f646617fe0fa542d401fb5287e.zip URL if that works via CORS

Test it with playwright Python - don’t edit any file other than sloccount.html and a tests/test_sloccount.py file

Since I was working on my phone I didn't review the results at all. It seemed to work so I deployed it to static hosting... and then when I went to look at it properly later on found that Claude had given up, cheated and reimplemented it in JavaScript instead!

So I switched to Claude Code on my laptop where I have more control and coached Claude through implementing the project for real. This took way longer than the project deserved - probably a solid hour of my active time, spread out across the morning.

I've shared some of the transcripts - one, two, and three - as terminal sessions rendered to HTML using my rtf-to-html tool.

At one point I realized that the original SLOCCount project wasn't even entirely Perl as I had assumed, it included several C utilities! So I had Claude Code figure out how to compile those to WebAssembly (it used Emscripten) and incorporate those into the project (with notes on what it did.)

The end result (source code here) is actually pretty cool. It's a web UI with three tabs - one for pasting in code, a second for loading code from a GitHub repository and a third that lets you open a Zip file full of code that you want to analyze. Here's an animated demo:

The cost estimates it produces are of very little value. By default it uses the original method from 2001. You can also twiddle the factors - bumping up the expected US software engineer's annual salary from its 2000 estimate of $56,286 is a good start!

I had ChatGPT take a guess at what those figures should be for today and included those in the tool, with a very prominent warning not to trust them in the slightest.
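To give a feel for how sensitive the estimate is to those factors, here is a rough sketch of the calculation in Python. It assumes the "original method" is Basic COCOMO in organic mode with SLOCCount's default coefficients as I remember them (effort = 2.4 × KSLOC^1.05 person-months, schedule = 2.5 × effort^0.38 months, overhead multiplier 2.4). Treat those numbers, and the function name, as assumptions rather than a copy of the tool's code. Only the $56,286 salary comes from the text above:

```python
def sloccount_style_estimate(sloc: int, salary: float = 56286.0, overhead: float = 2.4) -> dict:
    """Rough Basic COCOMO (organic mode) estimate; coefficients are assumed defaults."""
    ksloc = sloc / 1000
    effort_months = 2.4 * ksloc ** 1.05            # person-months of effort
    schedule_months = 2.5 * effort_months ** 0.38  # calendar months
    cost = (effort_months / 12) * salary * overhead
    return {
        "effort_months": effort_months,
        "schedule_months": schedule_months,
        "cost": cost,
    }


baseline = sloccount_style_estimate(10_000)
bumped = sloccount_style_estimate(10_000, salary=2 * 56286.0)
print(f"2000 salary: ${baseline['cost']:,.0f}")
print(f"doubled salary: ${bumped['cost']:,.0f}")
```

Cost scales linearly with salary and overhead, so twiddling those factors rescales the final dollar figure without changing the effort estimate.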

Infinite Monkey (via) Mihai Parparita's Infinite Mac lets you run classic MacOS emulators directly in your browser. Infinite Monkey is a new feature which taps into the OpenAI Computer Use and Claude Computer Use APIs using your own API keys and uses them to remote control the emulated Mac!

Here's what happened when I told OpenAI Computer Use to "Open MacPaint and draw a pelican riding a bicycle" - video sped up 3x.

If you want to create completely free software for other people to use, the absolute best delivery mechanism right now is static HTML and JavaScript served from a free web host with an established reputation.

Thanks to WebAssembly the set of potential software that can be served in this way is vast and, I think, under appreciated. Pyodide means we can ship client-side Python applications now!

This assumes that you would like your gift to the world to keep working for as long as possible, while granting you the freedom to lose interest and move onto other projects without needing to keep covering expenses far into the future.

Even the cheapest hosting plan requires you to monitor and update billing details every few years. Domains have to be renewed. Anything that runs server-side will inevitably need to be upgraded someday - and the longer you wait between upgrades the harder those become.

My top choice for this kind of thing in 2025 is GitHub, using GitHub Pages. It's free for public repositories and I haven't seen GitHub break a working URL that they have hosted in the 17+ years since they first launched.

A few years ago I'd have recommended Heroku on the basis that their free plan had stayed reliable for more than a decade, but Salesforce took that accumulated goodwill and incinerated it in 2022.

It almost goes without saying that you should release it under an open source license. The license alone is not enough to ensure regular human beings can make use of what you have built though: give people a link to something that works!

Abusing DuckDB-WASM by making SQL draw 3D graphics (Sort Of) (via) Brilliant hack by Patrick Trainer who got an ASCII-art Doom clone running in the browser using convoluted SQL queries running against the WebAssembly build of DuckDB. Here’s the live demo, and the code on GitHub.

The SQL is so much fun. Here’s a snippet that implements ray tracing as part of a SQL view:

CREATE OR REPLACE VIEW render_3d_frame AS
WITH RECURSIVE
    -- ...
    rays AS (
        SELECT
            c.col,
            (p.dir - s.fov/2.0 + s.fov * (c.col*1.0 / (s.view_w - 1))) AS angle
        FROM cols c, s, p
    ),
    raytrace(col, step_count, fx, fy, angle) AS (
        SELECT
            r.col,
            1,
            p.x + COS(r.angle)*s.step,
            p.y + SIN(r.angle)*s.step,
            r.angle
        FROM rays r, p, s
        UNION ALL
        SELECT
            rt.col,
            rt.step_count + 1,
            rt.fx + COS(rt.angle)*s.step,
            rt.fy + SIN(rt.angle)*s.step,
            rt.angle
        FROM raytrace rt, s
        WHERE rt.step_count < s.max_steps
          AND NOT EXISTS (
              SELECT 1
              FROM map m
              WHERE m.x = CAST(rt.fx AS INT)
                AND m.y = CAST(rt.fy AS INT)
                AND m.tile = '#'
          )
    ),
    -- ...

TypeScript types can run DOOM (via) This YouTube video (with excellent production values - "conservatively 200 hours dropped into that 7 minute video") describes an outlandishly absurd project: Dimitri Mitropoulos spent a full year getting DOOM to run entirely via the TypeScript compiler (TSC).

Along the way, he implemented a full WASM virtual machine within the type system, including implementing the 116 WebAssembly instructions needed by DOOM, starting with integer arithmetic and incorporating memory management, dynamic dispatch and more, all running on top of binary two's complement numbers stored as string literals.

The end result was 177TB of data representing 3.5 trillion lines of type definitions. Rendering the first frame of DOOM took 12 days running at 20 million type instantiations per second.

Here's the source code for the WASM runtime. The code for Add, Divide and ShiftLeft/ShiftRight provides a neat example of quite how much complexity is involved in this project.
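To get a feel for what "binary two's complement numbers stored as string literals" means, here is a small Python sketch of the representation. This is my own illustration, not the project's type-level TypeScript, and the function names are made up. It adds two 32-bit numbers one character at a time, which is roughly the kind of work an Add has to do when all it has is strings:

```python
WIDTH = 32


def to_bits(value: int, width: int = WIDTH) -> str:
    """Encode a signed integer as a two's complement bit string."""
    return format(value & ((1 << width) - 1), f"0{width}b")


def from_bits(bits: str) -> int:
    """Decode a two's complement bit string back to a signed integer."""
    value = int(bits, 2)
    if bits[0] == "1":  # a leading 1 marks a negative number
        value -= 1 << len(bits)
    return value


def add_bits(a: str, b: str) -> str:
    """Ripple-carry addition over characters; the final carry is dropped, so it wraps."""
    if len(a) != len(b):
        raise ValueError("operands must have the same width")
    carry = 0
    out = []
    for x, y in zip(reversed(a), reversed(b)):
        total = int(x) + int(y) + carry
        out.append(str(total % 2))
        carry = total // 2
    return "".join(reversed(out))


print(from_bits(add_bits(to_bits(5), to_bits(-7))))
```

Because the final carry is discarded, overflow wraps around the way WebAssembly's i32.add does.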

The thing that delights me most about this project is the sheer variety of topics you would need to fully absorb in order to pull it off - not just TypeScript but WebAssembly, virtual machine implementations, TSC internals and the architecture of DOOM itself.

files-to-prompt 0.5.

My files-to-prompt tool (originally built using Claude 3 Opus back in April) had been accumulating a bunch of issues and PRs - I finally got around to spending some time with it and pushed a fresh release:

- New -n/--line-numbers flag for including line numbers in the output. Thanks, Dan Clayton. #38

- Fix for utf-8 handling on Windows. Thanks, David Jarman. #36

- --ignore patterns are now matched against directory names as well as file names, unless you pass the new --ignore-files-only flag. Thanks, Nick Powell. #30

I use this tool myself on an almost daily basis - it's fantastic for quickly answering questions about code. Recently I've been plugging it into Gemini 2.0 with its 2 million token context length, running recipes like this one:

git clone https://github.com/bytecodealliance/componentize-py

cd componentize-py

files-to-prompt . -c | llm -m gemini-2.0-pro-exp-02-05 \

-s 'How does this work? Does it include a python compiler or AST trick of some sort?'

I ran that question against the bytecodealliance/componentize-py repo - which provides a tool for turning Python code into compiled WASM - and got this really useful answer.

Here's another example. I decided to have o3-mini review how Datasette handles concurrent SQLite connections from async Python code - so I ran this:

git clone https://github.com/simonw/datasette

cd datasette/datasette

files-to-prompt database.py utils/__init__.py -c | \

llm -m o3-mini -o reasoning_effort high \

-s 'Output in markdown a detailed analysis of how this code handles the challenge of running SQLite queries from a Python asyncio application. Explain how it works in the first section, then explore the pros and cons of this design. In a final section propose alternative mechanisms that might work better.'

Here's the result. It did an extremely good job of explaining how my code works - despite being fed just the Python and none of the other documentation. Then it made some solid recommendations for potential alternatives.

I added a couple of follow-up questions (using llm -c) which resulted in a full working prototype of an alternative threadpool mechanism, plus some benchmarks.

One final example: I decided to see if there were any undocumented features in Litestream, so I checked out the repo and ran a prompt against just the .go files in that project:

git clone https://github.com/benbjohnson/litestream

cd litestream

files-to-prompt . -e go -c | llm -m o3-mini \

-s 'Write extensive user documentation for this project in markdown'

Once again, o3-mini provided a really impressively detailed set of unofficial documentation derived purely from reading the source.

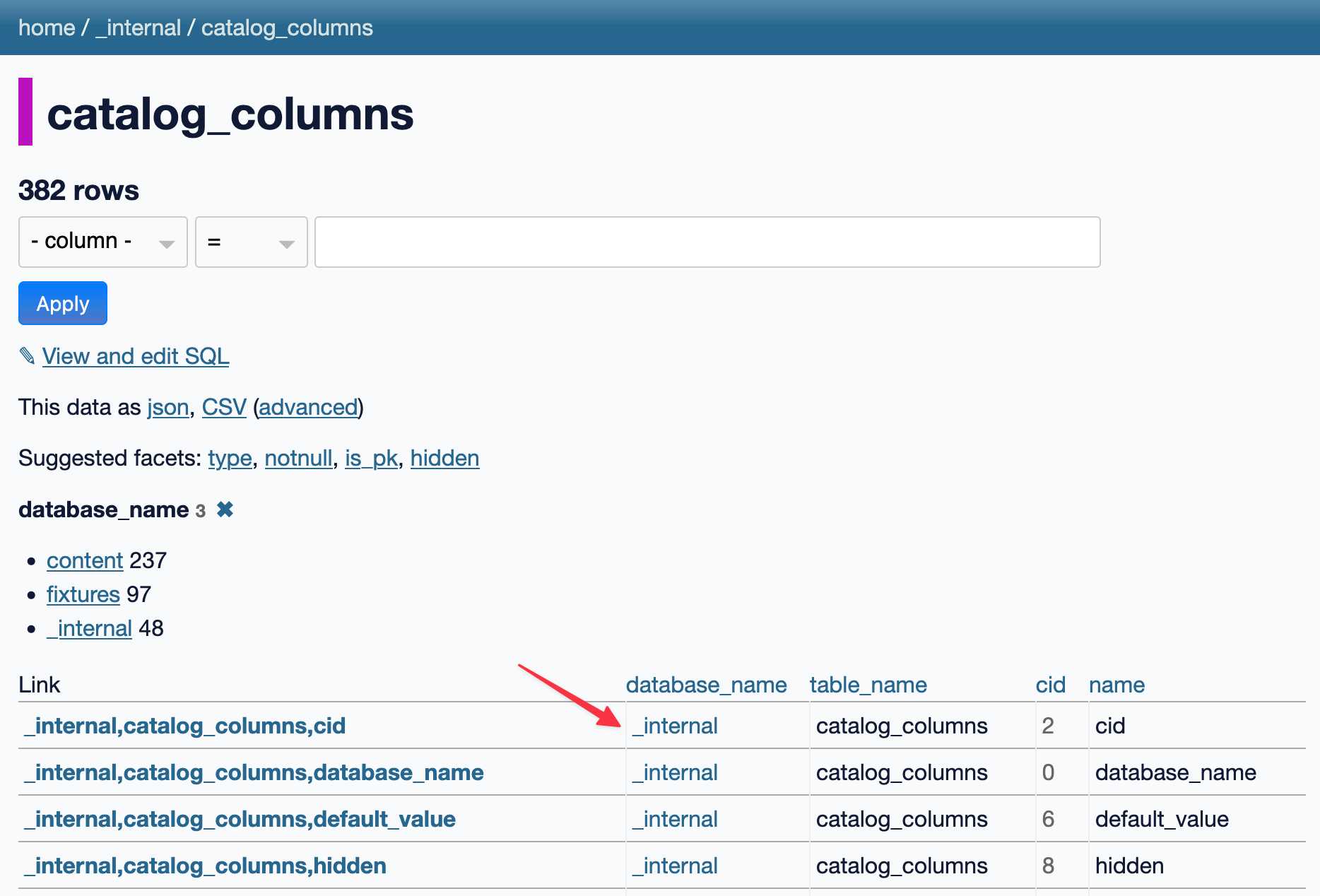

URL-addressable Pyodide Python environments

This evening I spotted an obscure bug in Datasette, using Datasette Lite. I figure it’s a good opportunity to highlight how useful it is to have a URL-addressable Python environment, powered by Pyodide and WebAssembly.

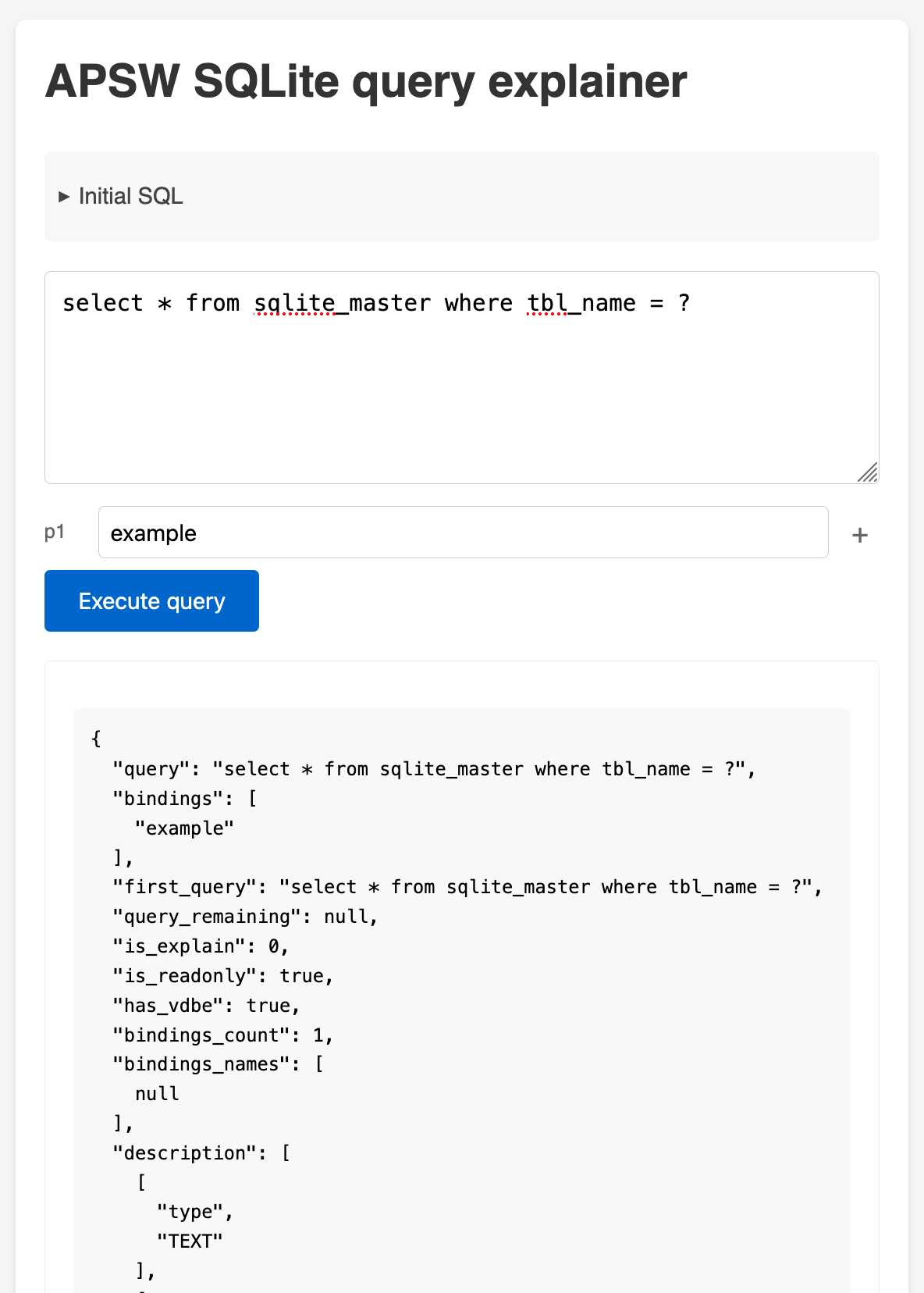

[... 1,905 words]

APSW SQLite query explainer. Today I found out about APSW's (Another Python SQLite Wrapper, in constant development since 2004) apsw.ext.query_info() function, which takes a SQL query and returns a very detailed set of information about that query - all without executing it.

It actually solves a bunch of problems I've wanted to address in Datasette - like taking an arbitrary query and figuring out how many parameters (?) it takes and which tables and columns are represented in the result.

I tried it out in my console (uv run --with apsw python) and it seemed to work really well. Then I remembered that the Pyodide project includes WebAssembly builds of a number of Python C extensions and was delighted to find apsw on that list.

... so I got Claude to build me a web interface for trying out the function, using Pyodide to run a user's query in Python in their browser via WebAssembly.

Claude didn't quite get it in one shot - I had to feed it the URL to a more recent Pyodide and it got stuck in a bug loop which I fixed by pasting the code into a fresh session.

ggml : x2 speed for WASM by optimizing SIMD

(via)

PR by Xuan-Son Nguyen for llama.cpp:

This PR provides a big jump in speed for WASM by leveraging SIMD instructions for qX_K_q8_K and qX_0_q8_0 dot product functions.

Surprisingly, 99% of the code in this PR is written by DeekSeek-R1. The only thing I do is to develop tests and write prompts (with some trails and errors)

They shared their prompts here, which they ran directly through R1 on chat.deepseek.com - it spent 3-5 minutes "thinking" about each prompt.

I've been seeing some very promising results from DeepSeek R1 for code as well. Here's a recent transcript where I used it to rewrite the llm_groq.py plugin to imitate the cached model JSON pattern used by llm_mistral.py, resulting in this PR.

I tried the same thing against o1, but I think DeepSeek R1 did it better. In particular, from the R1 chain of thought:

Wait, but in the model_map, "groq-gemma" maps to "gemma-7b-it". So, perhaps the model_map is needed to map the local model IDs to the actual Groq model names.

But since the model_map is hardcoded, and the API returns available models, perhaps the model_map should be built dynamically from the API response.

Alternatively, perhaps the model_map can be eliminated, and the models are registered based on the fetched models.

Wait, perhaps the model_map is no longer necessary. Instead, when the models are fetched from the API, each model's "id" is the actual model name used in the Groq API. So, when registering the models, the local model ID is "groq-{id}", and the groq_model_id is "id".

(It thought about model_map a lot before finally deciding to eliminate it, which was also my preferred resolution.)

A WebAssembly compiler that fits in a tweet (via) Here's that compiler:

let c=(b,l)=>WebAssembly.instantiate(new Int8Array(

[,97,115,109,1,,,,1,5,1,96,,1,127,3,2,1,,7,4,1,,,,10,

l=(b=b.split` `.flatMap(t=>t>-1?[65,t]:107+'-*/'.indexOf(t)))

.length+4,1,l-2,,...b,11]))

This article then does a great job of de-obfuscating that code, and uses it to explain a whole bunch of interesting concepts about how WebAssembly actually works.
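For anyone who finds the golfed JavaScript hard to follow, here is my own unrolled reading of it as a Python sketch. It only builds the module bytes; it does not instantiate them. It takes a space-separated reverse Polish expression and emits a module with one exported function that returns an i32. The function name and the range checks are my additions, not part of the tweet:

```python
def compile_rpn(source: str) -> bytes:
    """Translate an RPN expression such as "1 2 +" into WebAssembly module bytes."""
    ops = {"+": 0x6A, "-": 0x6B, "*": 0x6C, "/": 0x6D}  # i32.add, sub, mul, div_s
    body = []
    for token in source.split():
        if token in ops:
            body.append(ops[token])
        else:
            n = int(token)
            # Added check: only 0..63 fits in a one-byte signed LEB128 constant.
            if not 0 <= n <= 63:
                raise ValueError("constants must be between 0 and 63")
            body += [0x41, n]  # i32.const n
    code_size = len(body) + 4  # function count + body size + locals count + end
    if code_size > 127:
        raise ValueError("expression too long for one-byte section sizes")
    return bytes([
        0x00, 0x61, 0x73, 0x6D, 1, 0, 0, 0,  # "\0asm" magic and version 1
        1, 5, 1, 0x60, 0, 1, 0x7F,           # type section: () -> i32
        3, 2, 1, 0,                          # function section: one function of type 0
        7, 4, 1, 0, 0, 0,                    # export section: function 0, empty name
        10, code_size, 1, code_size - 2, 0,  # code section: one body, no locals
        *body,
        0x0B,                                # end
    ])


print(list(compile_rpn("1 2 +")))
```

The blanks in the JavaScript array literal become zero bytes once it is passed to Int8Array, which is why so many zeros appear explicitly here.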

2024

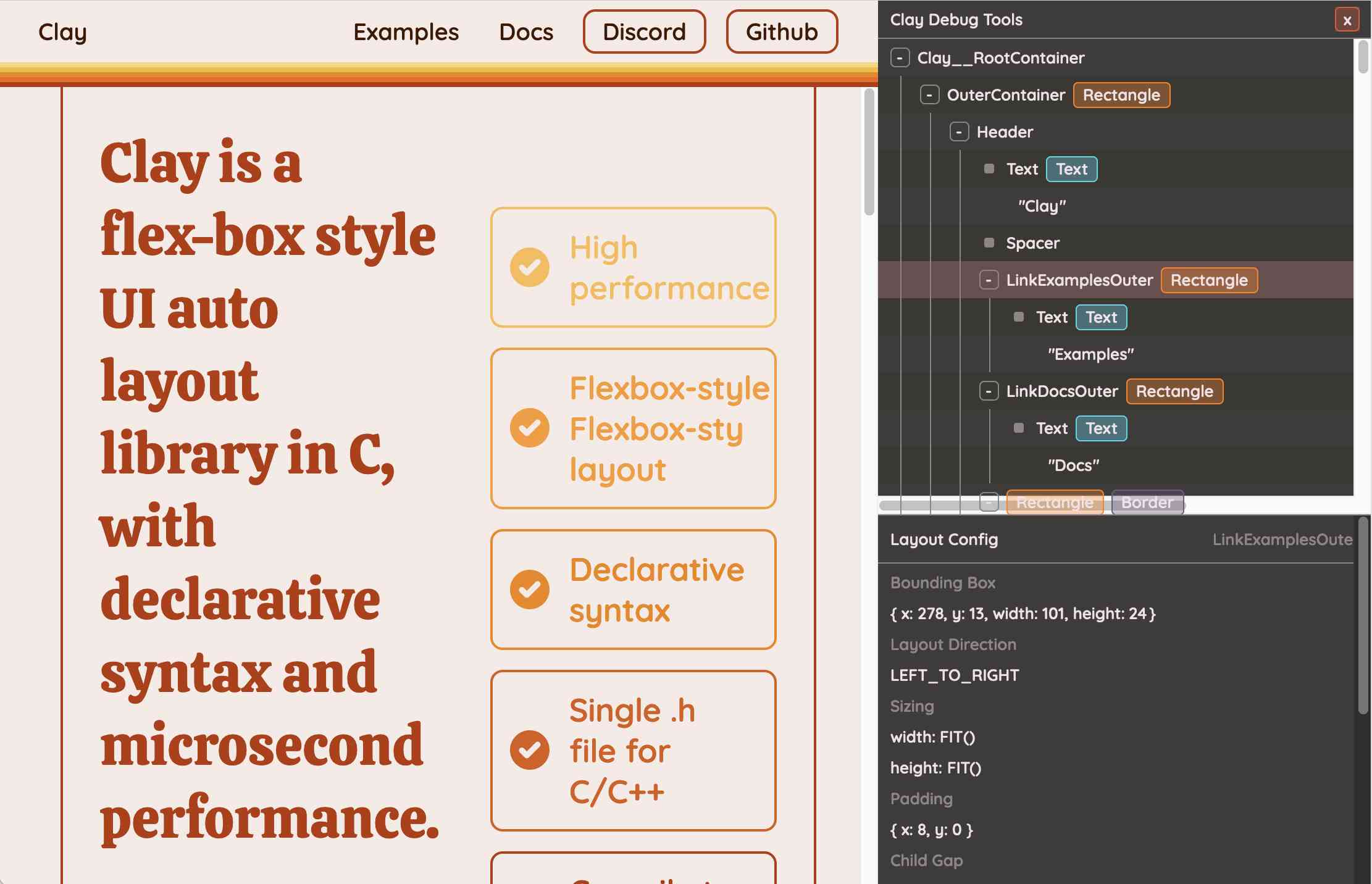

Clay UI library (via) Fascinating project by Nic Barker, who describes Clay like this:

Clay is a flex-box style UI auto layout library in C, with declarative syntax and microsecond performance.

His intro video to the library is outstanding: I learned a ton about how UI layout works from this, and the animated visual explanations are clear, tasteful and really helped land the different concepts:

Clay is a C library delivered in a single ~2000 line clay.h dependency-free header file. It only handles layout calculations: if you want to render the result you need to add an additional rendering layer.

In a fascinating demo of the library, the Clay site itself is rendered using Clay C compiled to WebAssembly! You can even switch between the default HTML renderer and an alternative based on Canvas.

This isn't necessarily a great idea: because the layout is entirely handled using <div> elements positioned using transform: translate(0px, 70px) style CSS, attempting to select text across multiple boxes behaves strangely, and it's not clear to me what the accessibility implications are.

Update: Matt Campbell:

The accessibility implications are as serious as you might guess. The links aren't properly labeled, there's no semantic markup such as headings, and since there's a div for every line, continuous reading with a screen reader is choppy, that is, it pauses at the end of every physical line.

It does make for a very compelling demo of what Clay is capable of though, especially when you resize your browser window and the page layout is recalculated in real-time via the Clay WebAssembly bridge.

You can hit "D" on the website and open up a custom Clay debugger showing the hierarchy of layout elements on the page:

This also means that the entire page is defined using C code! Given that, I find the code itself surprisingly readable:

void DeclarativeSyntaxPageDesktop() {

CLAY(CLAY_ID("SyntaxPageDesktop"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW(), CLAY_SIZING_FIT({ .min = windowHeight - 50 }) }, .childAlignment = {0, CLAY_ALIGN_Y_CENTER}, .padding = {.x = 50} })) {

CLAY(CLAY_ID("SyntaxPage"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW(), CLAY_SIZING_GROW() }, .childAlignment = { 0, CLAY_ALIGN_Y_CENTER }, .padding = { 32, 32 }, .childGap = 32 }), CLAY_BORDER({ .left = { 2, COLOR_RED }, .right = { 2, COLOR_RED } })) {

CLAY(CLAY_ID("SyntaxPageLeftText"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_PERCENT(0.5) }, .layoutDirection = CLAY_TOP_TO_BOTTOM, .childGap = 8 })) {

CLAY_TEXT(CLAY_STRING("Declarative Syntax"), CLAY_TEXT_CONFIG({ .fontSize = 52, .fontId = FONT_ID_TITLE_56, .textColor = COLOR_RED }));

CLAY(CLAY_ID("SyntaxSpacer"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW({ .max = 16 }) } })) {}

CLAY_TEXT(CLAY_STRING("Flexible and readable declarative syntax with nested UI element hierarchies."), CLAY_TEXT_CONFIG({ .fontSize = 28, .fontId = FONT_ID_BODY_36, .textColor = COLOR_RED }));

CLAY_TEXT(CLAY_STRING("Mix elements with standard C code like loops, conditionals and functions."), CLAY_TEXT_CONFIG({ .fontSize = 28, .fontId = FONT_ID_BODY_36, .textColor = COLOR_RED }));

CLAY_TEXT(CLAY_STRING("Create your own library of re-usable components from UI primitives like text, images and rectangles."), CLAY_TEXT_CONFIG({ .fontSize = 28, .fontId = FONT_ID_BODY_36, .textColor = COLOR_RED }));

}

CLAY(CLAY_ID("SyntaxPageRightImage"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_PERCENT(0.50) }, .childAlignment = {.x = CLAY_ALIGN_X_CENTER} })) {

CLAY(CLAY_ID("SyntaxPageRightImageInner"), CLAY_LAYOUT({ .sizing = { CLAY_SIZING_GROW({ .max = 568 }) } }), CLAY_IMAGE({ .sourceDimensions = {1136, 1194}, .sourceURL = CLAY_STRING("/clay/images/declarative.png") })) {}

}

}

}

}

I'm not ready to ditch HTML and CSS for writing my web pages in C compiled to WebAssembly just yet, but as an exercise in understanding layout engines (and a potential tool for building non-web interfaces in the future) this is a really interesting project to dig into.

To clarify here: I don't think the web layout / WebAssembly thing is the key idea behind Clay at all - I think it's a neat demo of the library, but it's not what Clay is for. It's certainly an interesting way to provide a demo of a layout library!

Nic confirms:

You totally nailed it, the fact that you can compile to wasm and run in HTML stemmed entirely from a “wouldn’t it be cool if…” It was designed for my C projects first and foremost!

ChatGPT Canvas can make API requests now, but it’s complicated

Today’s 12 Days of OpenAI release concerned ChatGPT Canvas, a new ChatGPT feature that enables ChatGPT to pop open a side panel with a shared editor in it where you can collaborate with ChatGPT on editing a document or writing code.

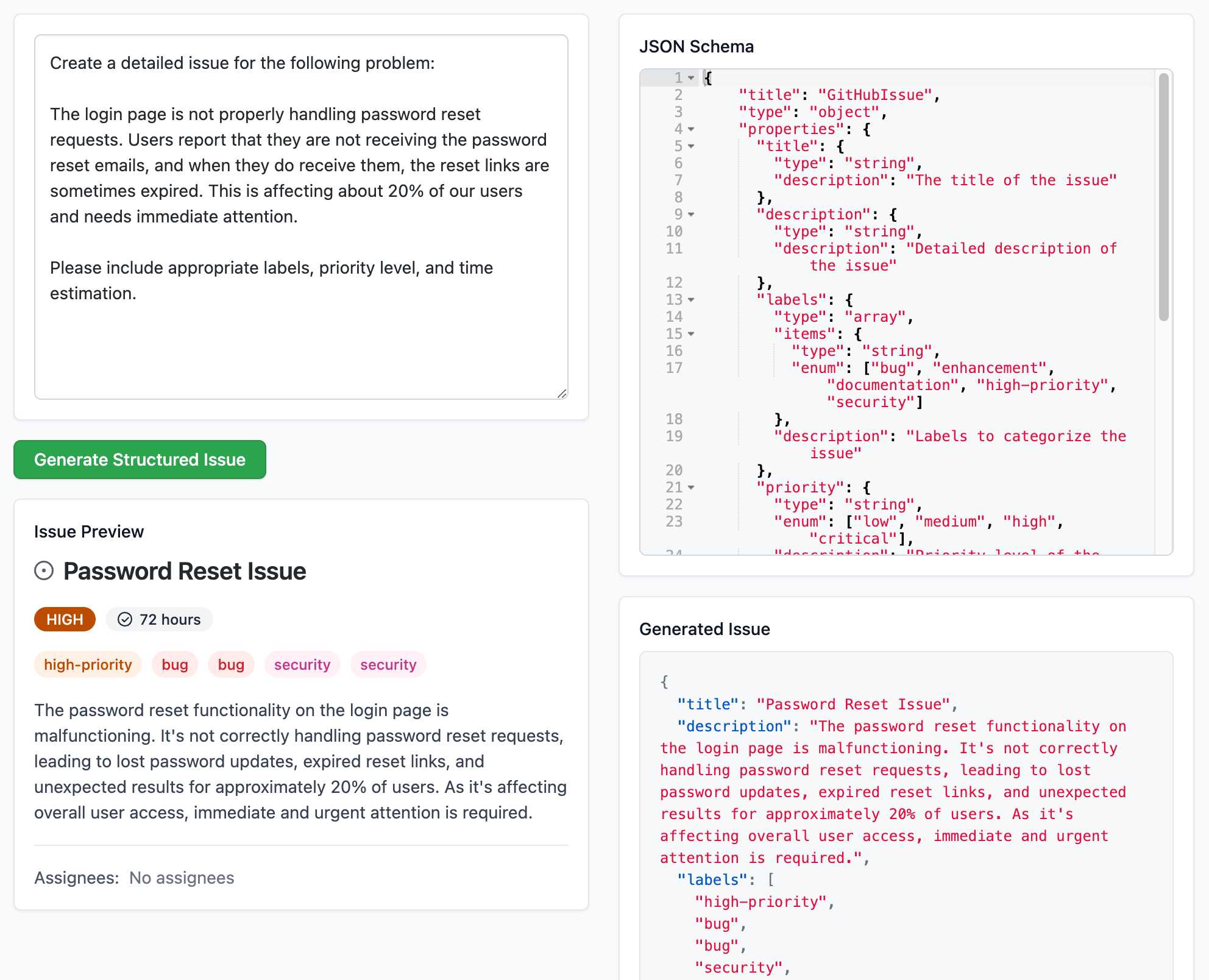

[... 1,116 words]

Structured Generation w/ SmolLM2 running in browser & WebGPU (via) Extraordinary demo by Vaibhav Srivastav (VB). Here's Hugging Face's SmolLM2-1.7B-Instruct running directly in a web browser (using WebGPU, so requires Chrome for the moment) demonstrating structured text extraction, converting a text description of an image into a structured GitHub issue defined using JSON schema.

The page loads 924.8MB of model data (according to this script to sum up files in window.caches) and performs everything in-browser. I did not know a model this small could produce such useful results.

Here's the source code for the demo. It's around 200 lines of code, 50 of which are the JSON schema describing the data to be extracted.

The real secret sauce here is web-llm by MLC. This library has made loading and executing prompts through LLMs in the browser shockingly easy, and recently incorporated support for MLC's XGrammar library (also available in Python) which implements both JSON schema and EBNF-based structured output guidance.

Generating documentation from tests using files-to-prompt and LLM. I was experimenting with the wasmtime-py Python library today (for executing WebAssembly programs from inside CPython) and I found the existing API docs didn't quite show me what I wanted to know.

The project has a comprehensive test suite so I tried seeing if I could generate documentation using that:

cd /tmp

git clone https://github.com/bytecodealliance/wasmtime-py

files-to-prompt -e py wasmtime-py/tests -c | \

llm -m claude-3.5-sonnet -s \

'write detailed usage documentation including realistic examples'

More notes in my TIL. You can see the full Claude transcript here - I think this worked really well!

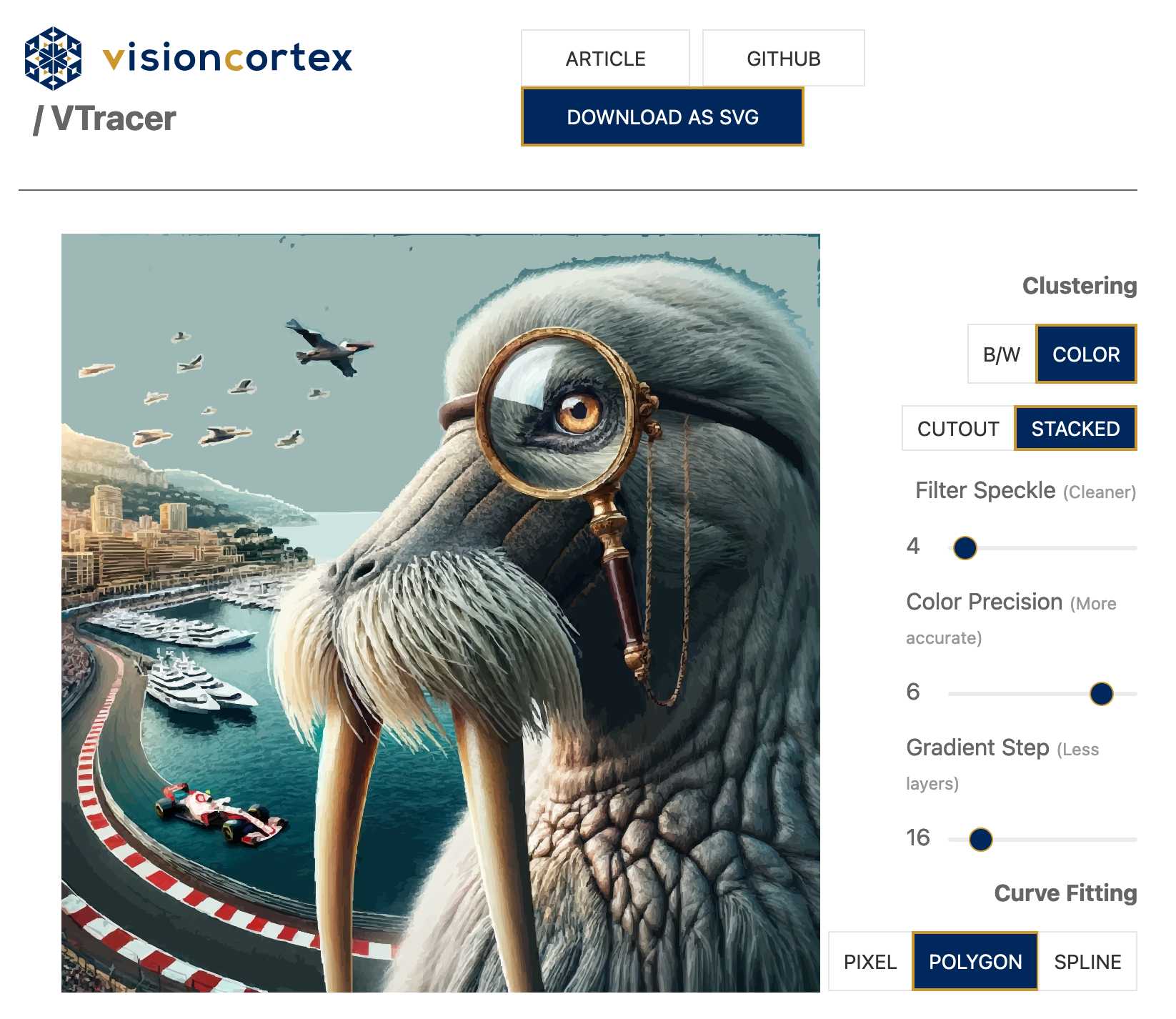

VTracer (via) VTracer is an open source library written in Rust for converting raster images (JPEG, PNG etc) to vector SVG.

This VTracer web app provides access to a WebAssembly compiled version of the library, with a UI that lets you open images, tweak the various options and download the resulting SVG.

I heard about this today on Twitter in a reply to my tweet demonstrating a much, much simpler Image to SVG tool I built with the help of Claude and the handy imagetracerjs library by András Jankovics.

llama-3.2-webgpu (via) Llama 3.2 1B is a really interesting model, given its 128,000 token input and its tiny size (barely more than a GB).

This page loads a 1.24GB q4f16 ONNX build of the Llama-3.2-1B-Instruct model and runs it with a React-powered chat interface directly in the browser, using Transformers.js and WebGPU. Source code for the demo is here.

It worked for me just now in Chrome; in Firefox and Safari I got a “WebGPU is not supported by this browser” error message.

Notes on running Go in the browser with WebAssembly (via) Neat, concise tutorial by Eli Bendersky on compiling Go applications that can then be loaded into a browser using WebAssembly and integrated with JavaScript. Go functions can be exported to JavaScript like this:

js.Global().Set("calcHarmonic", jsCalcHarmonic)

And Go code can even access the DOM using a pattern like this:

doc := js.Global().Get("document")

inputElement := doc.Call("getElementById", "timeInput")

input := inputElement.Get("value")

Bundling the WASM Go runtime involves a 2.5MB file load, but there’s also a TinyGo alternative which reduces that size to a fourth.

MiniJinja: Learnings from Building a Template Engine in Rust (via) Armin Ronacher's MiniJinja is his re-implementation of the Python Jinja2 (originally built by Armin) templating language in Rust.

It's nearly three years old now and, in Armin's words, "it's at almost feature parity with Jinja2 and quite enjoyable to use".

The WebAssembly compiled demo in the MiniJinja Playground is fun to try out. It includes the ability to output instructions, so you can see how this:

<ul>

{%- for item in nav %}

<li>{{ item.title }}</a>

{%- endfor %}

</ul>

Becomes this:

0 EmitRaw "<ul>"

1 Lookup "nav"

2 PushLoop 1

3 Iterate 11

4 StoreLocal "item"

5 EmitRaw "\n <li>"

6 Lookup "item"

7 GetAttr "title"

8 Emit

9 EmitRaw "</a>"

10 Jump 3

11 PopFrame

12 EmitRaw "\n</ul>"

No More Blue Fridays (via) Brendan Gregg: "In the future, computers will not crash due to bad software updates, even those updates that involve kernel code. In the future, these updates will push eBPF code."

New-to-me things I picked up from this:

- eBPF - a technology I had thought was unique to the Linux kernel - is coming to Windows!

- A useful mental model to have for eBPF is that it provides a WebAssembly-style sandbox for kernel code.

- eBPF doesn't stand for "extended Berkeley Packet Filter" any more - that name greatly understates its capabilities and has been retired. More on that in the eBPF FAQ.

- From this Hacker News thread: eBPF programs can be analyzed before running despite the halting problem because eBPF only allows verifiably-halting programs to run.

marimo.app. The Marimo reactive notebook (previously) - a Python notebook that's effectively a cross between Jupyter and Observable - now also has a version that runs entirely in your browser using WebAssembly and Pyodide. Here's the documentation.

llama.ttf (via) llama.ttf is "a font file which is also a large language model and an inference engine for that model".

You can see it kick into action at 8m28s in this video, where creator Søren Fuglede Jørgensen types "Once upon a time" followed by dozens of exclamation marks, and those exclamation marks then switch out to render a continuation of the story. But... when they paste the code out of the editor again it shows that the original exclamation marks were preserved - the LLM output existed only in the way they were rendered.

The key trick here is that the font renderer library HarfBuzz (used by Firefox, Chrome, Android, GNOME and more) added a new WebAssembly extension in version 8.0 last year, which is powerful enough to run a full LLM based on the tinyllama-15M model - which fits in a 60MB font file.

(Here's a related demo from Valdemar Erk showing Tetris running in a WASM font, at 22m56s in this video.)

The source code for llama.ttf is available on GitHub.

Experimenting with local alt text generation in Firefox Nightly (via) The PDF editor in Firefox (confession: I did not know Firefox ships with a PDF editor) is getting an experimental feature that can help suggest alt text for images for the human editor to then adapt and improve on.

This is a great application of AI, made all the more interesting here because Firefox will run a local model on-device for this, using a custom trained model they describe as "our 182M parameters model using a Distilled version of GPT-2 alongside a Vision Transformer (ViT) image encoder".

The model uses WebAssembly with ONNX running in Transformers.js, and will be downloaded the first time the feature is put to use.